I recently attended HP’s Securities Analyst Meeting (SAM), where HP made its case to Wall Street on why investors should believe in the company and buy the stock. I will write about the conference later, once I have thought through the content a bit more; unlike financial analysts, I need to think three to five years out. Thinking short term about HP doesn’t do anyone any good. What I do want to talk about now are two of the products shown at SAM, part of an impressive new tablet lineup.

The tablet market right now is exhibiting all the traditional signs of any tech market. There is a lot of experimentation going on to see what consumers and business users really want. As in the early days of the consumer PC, Apple is punishing everyone who stands in its way in the tablet market. If you have any doubt about that, just ask Motorola, RIM, Samsung, and anyone attached to webOS. As experimentation progresses, the market starts to settle into a more predictable rhythm, and after that, massive segmentation and specialization will occur. This is classic product lifecycle behavior. HP, like Dell and Lenovo, has learned a lot over the last 18 months, and HP in particular has put the pedal to the metal with its latest tablets.

HP split its lineup into a consumer line and a large-enterprise line. What about small business? It is too early to know, and quite frankly this will be the last market to adopt tablets, so I like this decision. On the technology side, HP has chosen to forgo ARM-based designs in its latest offering and has instead gone with the Intel CloverTrail solution. Only after we all see pricing and battery life will we know if this is a good decision. Let’s dive into the models.

HP ENVY x2 for the Consumer

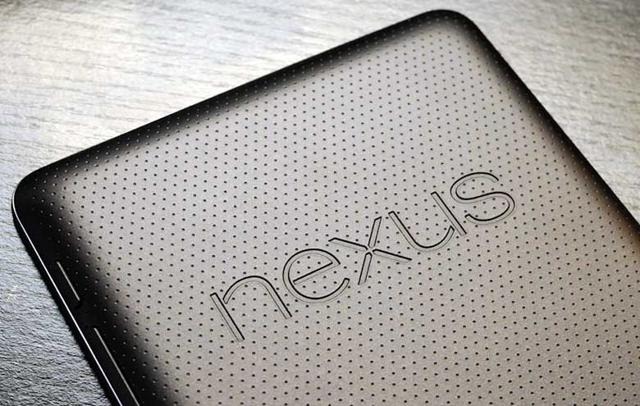

The first time I saw the ENVY x2 at the Intel Developer Forum I was stunned, quite frankly, at how thin, light, and sexy the unit was. My most recent Intel tablet experience was with a thick, heavy Samsung tablet with a loud fan, used for Windows 8 app development. The HP ENVY x2 wasn’t anything like that: it was thin, light, and fan-less, with a sexy industrial design made from machined aluminum. No, this didn’t feel like an iPad… it felt in some ways even better. This is a big thing for me to say given my primary tablets have been iPads… gen 1-2-3. It is very hard to describe good ID in words, but it just felt good, real good.

It was apparent to me that HP has stepped up its game in design, and after talking with Stacy Wolff, HP’s Global VP of design, I learned that the company has amped up its design resources a lot. While most of Intel’s OEMs are focused on enterprise devices, this consumer device stands out. The only things that could potentially derail the ENVY x2 are a lack of Metro applications from Microsoft’s ecosystem or too high a price tag. Net-net, I will need to see pricing and the Windows 8 Metro launch apps before I can assess what this will do to iPad and even notebook sales.

HP ElitePad for Commercial Markets

Let’s face it, commercial devices run counter on many variables to what consumers want. The tablet market is no different. Enterprise IT wants security, durability, expandability, cheap and known deployment, training, software, and manageability. Consumers want sexy, cool, thin, light, and easiest to use, and based on the number of cracked iPad screens (mine included), durability is not that important to them. HP has somehow managed to bridge the gap between beauty and brawn in a unique way. When I first saw the ElitePad, I thought it was a consumer device. It even has beveled corners to make it easier to pick up off the conference table! Like the ENVY x2, it also feels like machined aluminum.

Because it has an Intel CloverTrail-based design at its core, the ElitePad can run the newer Metro-based Windows 8 apps as well as both legacy and new Windows 8 desktop apps. IT likes to leverage its investments in software and training, and it will like that the ElitePad can run full Office with Outlook as well as any corporately developed apps without any changes. You don’t want to be running Photoshop on this as it is Atom-based, but lighter apps will run just fine.

IT “sees” the ElitePad as a PC. Unlike an iPad, it is deployed, managed, and secured like a Windows PC. For expandability, durability, and extended battery life, HP has engineered a “jacket” system that easily snaps around the ElitePad, which felt to me like the HP TouchPad. The stock jacket provides extra battery life and a full bevy of I/O, including USB and even full-sized HDMI. If HP isn’t doing it already, it should be investing in special jacket designs for health care, retail, and manufacturing. Finally, there is serviceability. While I don’t want to debate whether throwing away a device is better than servicing it, IT believes that servicing it is better than tossing it in the trash. For large customers with serviceability needs, HP is even offering special fixtures that make the tablet easy to service by attaching suction cups to the surface and removing the display. Net-net, this is a very good enterprise alternative to any iPad enterprise rollout.

I am very pleased to see the care and time put into the planning and design of these devices. The three unknowns at this point are pricing, battery life, and Windows 8 Metro acceptance, including the number of tablet apps. If there is a lack of Metro apps at launch, the entire consumer category will be in jeopardy in Q4, but commercial is quite different, as ecosystems can grow into their shoe size over time. I cannot give a final assessment until I have actually used the devices, but what I see from HP in Windows 8 tablets is exceptional.