For the past few weeks, I have been using the M1-powered Mac mini as my primary day-to-day computer. I have not lived through as many Apple processor transitions as others who have been sharing their thoughts, but I vividly remember Apple’s transition to Intel. Ever since the late ’90s, Macs have been my primary computers. I have fond memories of bringing my Mac into meetings with PC OEMs and Intel in the early 2000s and always taking flak for not using Windows and Intel, or what some would call a real work computer. That is why I found it ironic, after Apple switched to Intel, how many Macs I saw floating around Intel when I was there for meetings. But that’s another story.

During the Intel transition, the first Macs running Intel silicon had a somewhat rocky beginning, with many apps not optimized for x86. Rosetta handled the translation of code from the PowerPC architecture to Intel’s x86. The main thing about that transition that was burned into my mind was the endlessly bouncing icons (the action an icon performs in the dock while an app is opening on macOS) and how many times an app either never opened, requiring a force quit, or hung badly enough to require a total restart. Once apps were open, I recall the experience being mostly solid, apart from some fairly frequent crashes. Still, those minutes wasted opening an app as Rosetta translated are burned into my memory.

Rosetta 1’s translation abilities depended on the code being translated and on Intel’s processing power at the time, which fell well short of today’s far more sophisticated and powerful x86 processors. Given the underlying technology available during Apple’s transition from PowerPC to x86, some of these hiccups are understandable in retrospect. Still, many of us early users remember the pain of that transition.

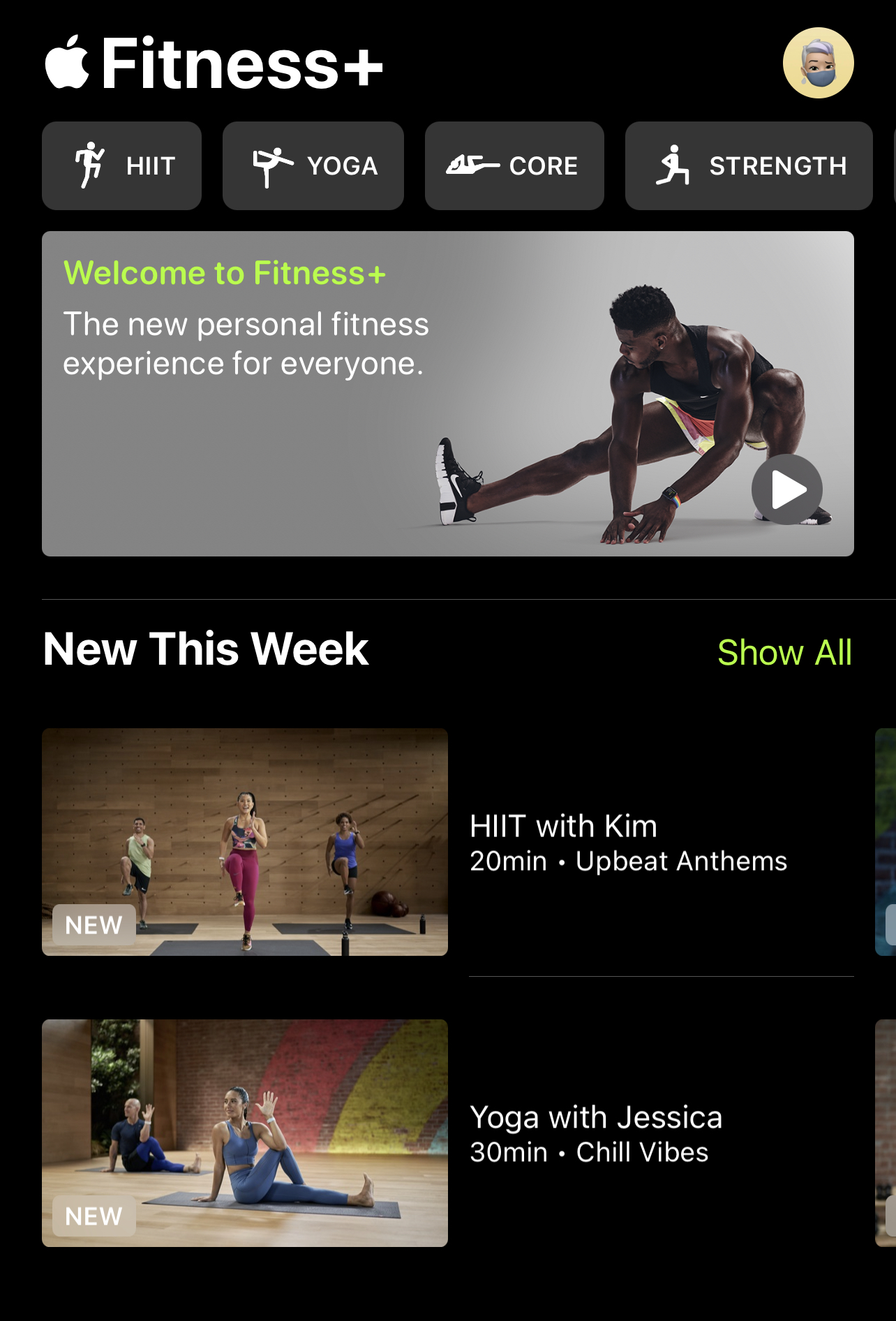

Fast forward to today, and my experience with the M1-powered Mac mini is night and day by comparison. After a smooth migration to the M1 Mac mini from my 16″ MacBook Pro, I instantly picked up work where I had left off on the MacBook. I had a little flashback anxiety as I first launched Superhuman, the fairly lightweight email client I run for Gmail. The Superhuman icon started bouncing in the dock and did so for 20 seconds or more. I briefly had Rosetta 1 déjà vu. I immediately quit the app and tried again to see what would happen, and it opened instantly. I quit and reopened it several times, and each time it opened instantly, to my relief. I then went on to open nearly all my other applications that I knew were not M1 native, such as the Office apps, Zoom, Slack, and some apps I use for editing audio. All of them took 20-30 seconds on first open but then opened instantly every time after.

What makes Rosetta 2 unique this time around is that Apple is translating more like an ahead-of-time (AOT) compiler than a just-in-time (JIT) compiler. Upon first open, Rosetta 2 essentially translates all the x86 application code into native M1 instructions, which it then runs at each subsequent open. This is the key experience that led many reviewers to remark on how well x86 apps performed on the M1 and how they felt like native apps. That’s because each one basically was a native app after Rosetta 2 had performed its translation. This is a huge advantage of Apple being the designer of the Mac CPU. Rosetta could, for the first time, be optimized and co-designed with the M1, with each having unique knowledge of the other. This is not a luxury Apple had in past silicon platform transitions.
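To make the translate-once behavior concrete, here is a minimal toy sketch in Python. It is not how Rosetta 2 is actually implemented; the `TranslationCache` class, its methods, and the fake "translation" step are all hypothetical stand-ins, meant only to show why the first launch is slow and every later launch is fast.

```python
import hashlib


class TranslationCache:
    """Toy model of ahead-of-time translation with a cache.

    Hypothetical illustration only; this is not Rosetta 2's real design.
    """

    def __init__(self):
        self._cache = {}        # digest of the x86 binary -> translated code
        self.translations = 0   # how many times the slow path has run

    def _translate(self, x86_code: bytes) -> bytes:
        # Stand-in for the expensive whole-binary translation step.
        self.translations += 1
        return b"arm64:" + x86_code

    def launch(self, x86_code: bytes) -> bytes:
        # Identify the binary by its contents, translate it only if unseen.
        key = hashlib.sha256(x86_code).hexdigest()
        if key not in self._cache:
            self._cache[key] = self._translate(x86_code)
        return self._cache[key]


cache = TranslationCache()
app = b"\x55\x48\x89\xe5"    # arbitrary bytes standing in for an x86 binary
first = cache.launch(app)     # slow path: translate and store
second = cache.launch(app)    # fast path: reuse the stored translation
```

In this model the cost is paid once per binary, which matches the pattern I saw: one long first launch, then instant opens afterward.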

I timed each non-native app’s translation process upon first open, and the average time was 26.7 seconds. That’s basically the time it took for the M1 to translate an x86 app to native M1 code. This is pretty impressive when you consider all that is going on under the hood.

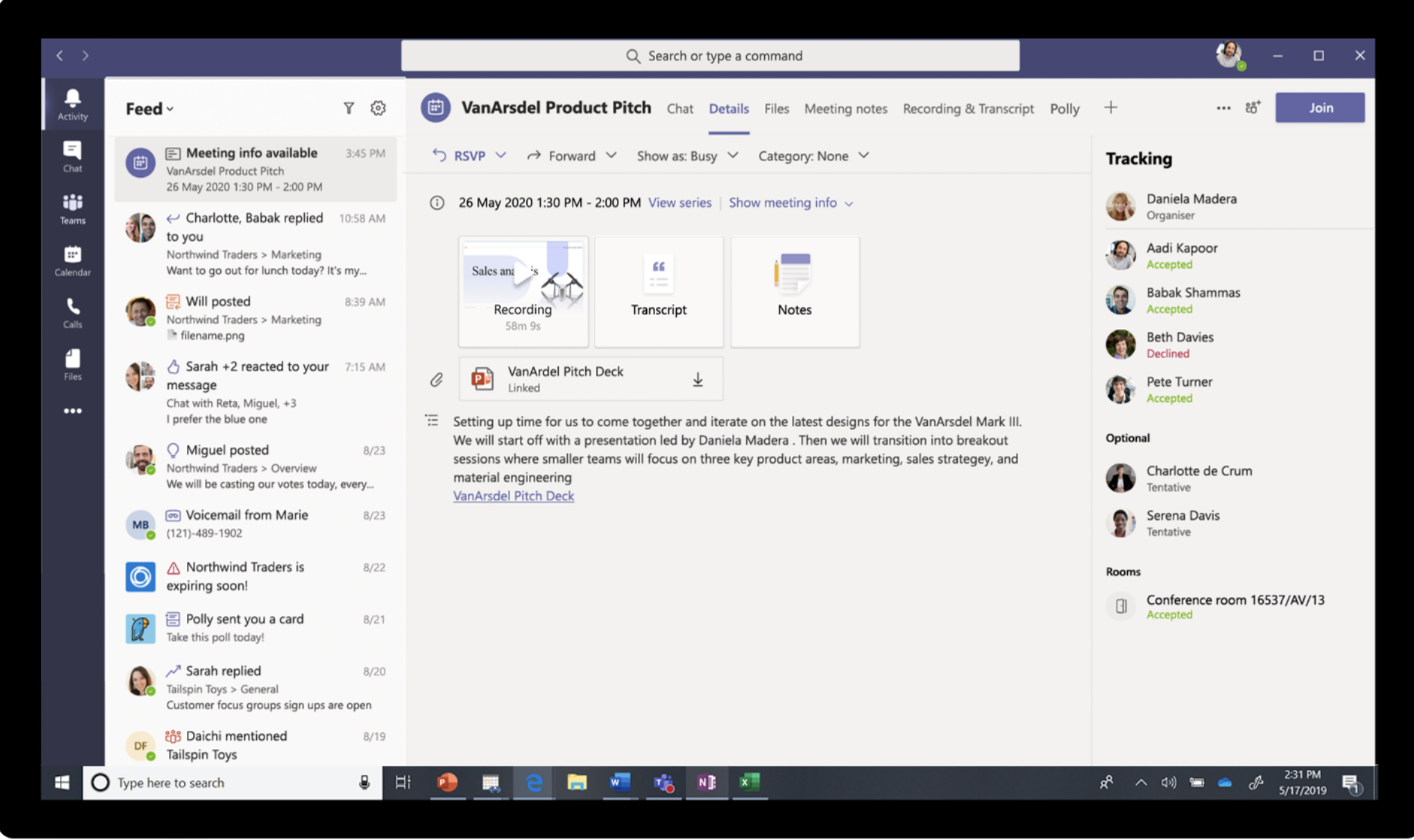

Once the translation process was complete, all my non-M1-native apps performed just as I was used to on my Intel-based MacBook Pro. To have a reference, I timed how long it took the same apps to launch on both the M1 and my Intel-based MacBook Pro (2.4 GHz 8-core Core i9 processor). The table below shows the average time, in seconds, for each app to launch and become usable on each system. I timed each app five times and averaged the results.

| App | M1 Mac mini (s) | Intel 16″ MBP (s) |
| --- | --- | --- |
| Superhuman | 4.41 | 6.61 |
| Zoom | 2.52 | 2.12 |
| Word | 0.94 | 0.97 |
| Excel | 1.89 | 2.52 |
| Slack | 5.65 | 2.87 |
| PowerPoint | 0.97 | 0.95 |
| Teams | 4.05 | 3.91 |
| Outlook | 1.03 | 0.95 |
| Photoshop | 6 | 7.5 |
| MS Edge | 1.81 | 1.35 |
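For readers who want to compare the two columns directly, the following short Python sketch computes the M1-to-Intel launch-time ratio for each app. The numbers are copied from the table above; the variable names are my own, and a ratio below 1.0 simply means the app launched faster on the M1 in my informal timing.

```python
# Average launch times in seconds, copied from the table above:
# app -> (M1 Mac mini, Intel 16-inch MacBook Pro)
launch_times = {
    "Superhuman": (4.41, 6.61),
    "Zoom": (2.52, 2.12),
    "Word": (0.94, 0.97),
    "Excel": (1.89, 2.52),
    "Slack": (5.65, 2.87),
    "PowerPoint": (0.97, 0.95),
    "Teams": (4.05, 3.91),
    "Outlook": (1.03, 0.95),
    "Photoshop": (6.0, 7.5),
    "MS Edge": (1.81, 1.35),
}

# Ratio of M1 time to Intel time; below 1.0 means faster on the M1.
ratios = {app: m1 / intel for app, (m1, intel) in launch_times.items()}

# Apps that launched faster on the M1, in alphabetical order.
faster_on_m1 = sorted(app for app, ratio in ratios.items() if ratio < 1.0)

# Print the apps from the best to the worst ratio for the M1.
for app, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{app:<11} {ratio:.2f}x")
```

Keep in mind these are hand-timed averages of five launches each, so small differences between the two machines are well within the noise.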

As you can see, launch times on the two systems were broadly comparable. What was surprising was how none of my workflows were interrupted as I moved from the Intel MacBook Pro to the M1 Mac mini. Literally zero disruption.

In terms of speed and performance, while I’m not a benchmarker, I did try to tax the M1 system in whatever ways I could with the software I have. Below was the CPU performance while I opened an x86-only app to trigger Rosetta 2 translation and scrubbed a 4K video in real time, all while on a Microsoft Teams video call (Teams is not optimized for M1). I know it is a weird workflow to test, but it was the most CPU-intensive software at my fingertips.

As you can see, the spike was caused by the Rosetta 2 translation, but at no point during this 1-2 minute span did the system become sluggish or unresponsive, or show the spinning rainbow wheel of death familiar to macOS users.

The M1 has four performance cores and four low-power cores, and what I found most intriguing about this CPU chart is that even the four low-power cores kick in, to a degree, during CPU-intensive work and are not there only for lower-performance tasks.

Suffice it to say, the M1 has gone beyond my expectations right out of the gate, and from the reviews, it looks like I’m not alone. Any localized issues people experience with non-optimized apps will be a thing of the past by the end of next year, when nearly all, and probably all, macOS apps will be optimized for the M1.

The M1 and the future of Macs

I want to conclude with a few thoughts on the role the M1 will play in the future of the Mac and Apple Silicon. I’ve long been bullish on Apple’s ambitions with custom silicon, since Apple has helped establish the trend toward purpose-built silicon and away from the old world of general-purpose silicon. We also know Apple has a growing team of in-house silicon designers, which gives the company a huge advantage in custom silicon. What is exciting about Apple now challenging its silicon team to set a new bar for high-performance computing is how those efforts will benefit Apple as a whole, not just M1 Macs.

The work the team puts in to push the limits of performance-per-watt in high-performance applications will likely trickle down to things like iPhone, iPad, future augmented or virtual reality products, and more. In other words, this effort will bear fruit across Apple silicon, not just for Mac hardware.

Having experienced some of the latest processors from Intel and AMD, I am convinced Apple will set a new bar not just in notebooks but also in desktops and workstations as the M1 scales up to those classes of machines. And this leads me to the last point I want to make.

Apple making processors for Macs is extremely good for semiconductor competition. This is not to say that AMD and Intel have been standing still, but both companies have been focused on competing with each other, and largely in the datacenter, when it comes to pushing high-performance design and applications. Apple has now created a new dynamic in which both companies must compete with Apple to bring their PC customers a solution that can stand up to M1 Macs. If they don’t, Apple could run away with the high end of the PC market, which would have a drastic impact on the PC category, one I’m not sure Intel, AMD, and the PC OEMs have fully realized yet.