Of all the futuristic technologies that seem closer to becoming mainstream each day, robotics is the one that is likely to elicit both the strongest and widest range of reactions. It’s not terribly surprising if you really think about it. After all, robots in various forms offer the potential for both the most glorious beneficence and the most insidious evil. From performing superhuman feats to the complete destruction of the human race, it’s hard to imagine a technology that could have a more wide-ranging impact.

Of course, the practical reality of today’s robots is far from either of these extremes. Instead, they’re primarily focused on freeing our lives and our businesses of the drudgery of mundane tasks. Whether it’s automatically sweeping our floors or rapidly piecing together elements on an assembly line, the robots of today are laser-focused on the practical. Still, whenever most people think about robots in any form, I’m guessing visions of dystopian robot futures silently lurk in the back of their minds, whether people want to admit it or not.

We can’t help it, really. We have all been exposed to so many types of robotic visions in our various forms of entertainment for so long that it’s hard to imagine not being at least somewhat affected. Whether through the pioneering science fiction novels of Isaac Asimov, the giddy futurism of the Jetsons cartoons, the hellish destruction of the Terminator movies, or countless other examples, we all come to the concept of robotics with preconceived notions. Much more than with any other technology, it’s very difficult to approach robotics objectively.

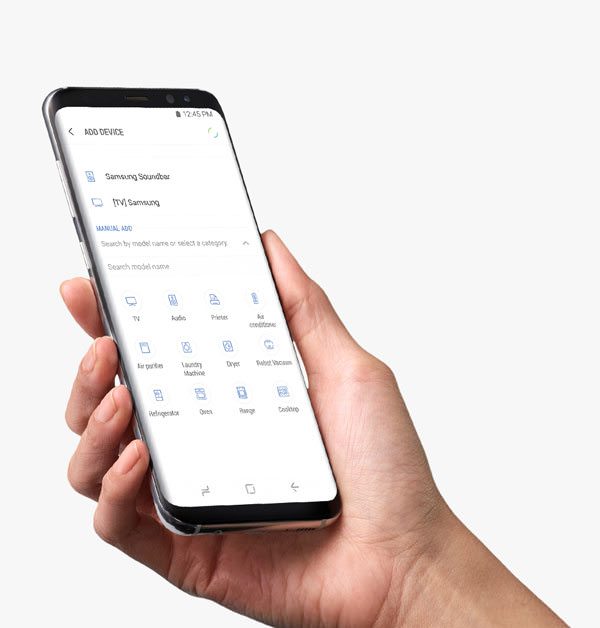

Now that we’re starting to see some more interesting new advances in robotics-driven services, such as food and package delivery and, eventually, autonomous cars, the question becomes how those loaded expectations will affect our view and acceptance of these new offerings. At a simplistic level, it’s easy to say, and likely true, that we can accept these basic capabilities for what they are: minor conveniences. After all, there is no need to worry about robotic delivery carts causing much more damage than scaring a few pets.

In fact, initially, there is likely to be a “cool” factor of having something done by a robot. Just as with other new technologies, it may not even matter if it’s the best or most efficient way of achieving a particular task: the novelty will be considered a value unto itself. Eventually, though, we’ll likely start to turn a more critical eye to these capabilities, and only those that can offer some kind of lasting value will succeed.

But the real challenge will come when we start to combine robotics with Artificial Intelligence (AI) and deep learning. That’s where things can (and likely will) start to get both really exciting and really scary. The irony is that to achieve the kind of “Asimovian” robotic benevolence that our most positive views of the technology bring to mind (whether that be robotic surgery, butler-like personal assistant services, or other dramatically beneficial capabilities), the machines are going to have to get smarter and more capable.

However, we’ve also seen how that movie ends: not well. Though it is admittedly a bit irrational, there’s no shaking the fear that we’re rapidly approaching a point in the evolution of technology, driven by this inevitable blending of robotics and software-driven machine learning, where some trends with really big societal impact could start to develop. We won’t really be able to recognize them for some time, but it does feel like we’re on the cusp.

Of course, there is also the potential for some incredibly positive developments: removing people from dangerous conditions, helping extend our ability to explore both our world and our universe, and letting people focus on the things that really matter to them instead of the things they have to do. As we move forward with robotics-driven technological advances and transition from science fiction to reality, the possibilities are indeed endless.

We should be ever mindful, however, of just how far we are willing to go.