Another year has come and gone, and in the tech world, it seems not much has changed. 2015 was arguably a relatively modest year when it comes to major innovations, with many of the biggest developments essentially coming as final delivery or extensions to bigger trends that started or were first announced in 2014. Autonomous cars, smart homes, wearables, virtual reality, drones, Windows 10, large-screen smartphones, and the sharing economy all made a bigger initial mark in 2014 and continued to evolve over this past year.

Looking ahead to 2016, I expect we will see changes that, on the surface, also don’t seem to amount to much initially, but will actually prove to be key foundational shifts that drive a very different, and very exciting, future. Here are the first five of my predictions of key themes for the new year (the next five will appear in next week’s column).

Prediction 1: The Death of Software Platforms, The Rise of the MetaOS

Proprietary software platforms like iOS, Windows, and Android have served as the very backbone of the tech industry and the tech economy for quite some time, so it may seem a bit ludicrous to predict their demise. However, I believe the walls supporting these ecosystems are starting to crumble. Device operating systems were built to enable the creation of applications that worked on specific devices, and they did an incredible job (perhaps too good a job) of doing just that. We now have somewhere between 1.5 and 2 million apps available for each of iOS and Android, and hundreds of thousands of Windows apps. The problem is, the vast majority of people download fewer than a hundred and actually use more like 5-10 apps on a regular basis.

More importantly, most consumers now own and regularly use multiple devices with multiple operating systems and what they really want isn’t a bunch of independent apps, but access to the critical services that they access through their devices. Yes, some of those services are delivered through apps, but many of the biggest software and service providers are altering their strategies to ensure that they can deliver a high quality experience regardless of the app, device, OS, or browser being used to access their application or service. Factor in the increasing range of smart home, smart car, and other connected devices we’ll all own and regularly use in the near future—plus the general app fatigue that I think many consumers now feel—and the whole argument around an app-driven world starts to make a lot less sense.

Instead, from Facebook to Microsoft to Dropbox and hundreds of other cloud service providers, we’re seeing companies build what I call a MetaOS: a platform-like layer of software and services that remains independent of any underlying device platform to deliver the critical capabilities that people are ultimately looking to access. Bigger companies like Facebook and Microsoft are integrating a wide range of services into these MetaOS platforms, particularly around communications and contextual intelligence agents, that will increasingly take on the tasks and roles that other individual applications used to. Want access to media content or documents or (eventually) commerce and financial services? Even better, want a smart assistant to help coordinate your efforts? Log into one of these MetaOS megaservices and your unique digital identity (another key element of a MetaOS) will give you secure access to these services and much more.

Look for Google, Apple, and Amazon, among others, to start making a bigger effort in this area, and expect to see some of these larger companies make key acquisitions to fill in gaps in their MetaOS efforts over the course of the next year. This isn’t something that’s going to happen overnight, but I think 2016 will be the year we start to see more of these strategies take shape.

Prediction 2: Market Maturation Leads to Increased Specialization

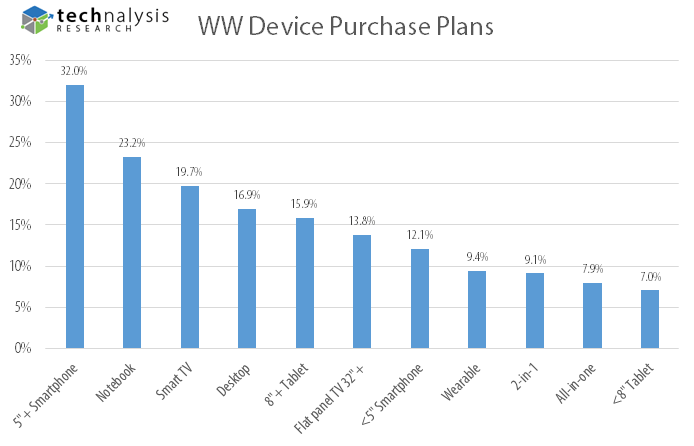

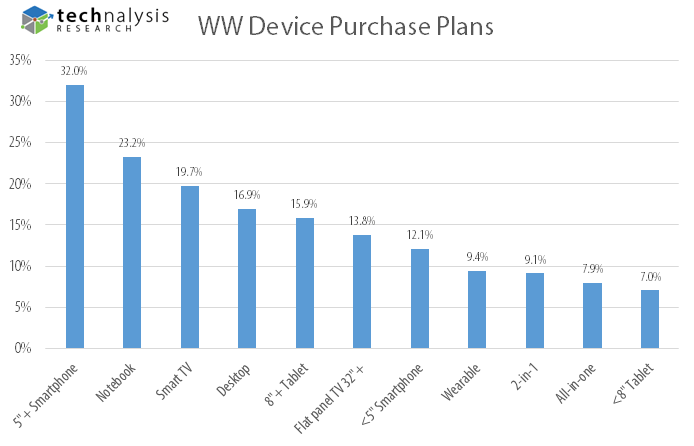

The era of products that appeal to a broad cross-section of all consumers is coming to an end, and it’s being replaced by a new era in which we will see more products that are tightly focused on specific sets of customers. The key product categories have matured, and it’s hard to find broad new product categories that appeal to a wide range of consumers in the same way that PCs, tablets, and smartphones have.

That’s not to say that we won’t be seeing any exciting or interesting new product categories (after all, something has to be next year’s hoverboard), but they won’t have the same kind of wide-ranging impact that the now more “traditional” smart devices have had. As a result, I think we’ll see a wide variety of sub-categories for smart homes, connected cars, wearables, drones, VR headsets, and consumer robotics that will perhaps sell in the tens or hundreds of thousands instead of the tens of millions that other product categories have enjoyed. The Maker Movement and crowd-funding efforts will go a long way towards helping drive these changes, but I also expect that we’ll see the China/Shenzhen hardware ecosystem start to adjust and focus more effort on being able to specialize and even personalize devices. The end result will be a wider range of devices that more specifically meet different consumers’ needs. At the same time, I believe it will also be harder to “find the pulse” of where major hardware developments are headed, because they will be moving in so many different directions. The key will be in developing manufacturing technologies that make greater specialization possible and that can produce products profitably with smaller production runs.

Prediction 3: Apple Reality Check Leads to Major Investment

Apple has had an incredible run at the top of the technology heap for quite some time and, to be clear, I’m not saying that 2016 is the year this will end. What I am saying, however, is that 2016 is the year the company will face some of its biggest challenges, and the year that the “reality distortion field” surrounding the company will start to fade. With two-thirds of its revenues dependent on a single product line (the iPhone) that’s running into the realities of a slowing global smartphone market, the company is going to have to make some big new bets in 2016 in order to retain its market-leading position. I’m not exactly sure what those bets might be (augmented/virtual reality, financial services, automotive, enterprise software, media, or some combination of all of the above), but I’m convinced there are a great many very smart people at Apple who are undoubtedly thinking through what’s next for them. Maintaining the status quo in 2016 doesn’t seem like a great option, so this should be the year they seriously tap into that massive cash reserve of theirs and make some major, game-changing acquisitions.

Prediction 4: The Great Hardware Stall Forces Shift to Software and Services

As most companies besides Apple have already learned, it’s very hard to make money on hardware alone, and that problem will only be exacerbated in 2016. With expected declines in tablets and PCs, the flattening of the smartphone market, and only modest overall uptake for wearables and other new hardware categories, we’re nearing the end of a decades-long run of hardware growth. We’ll see pockets of opportunity to be sure (see Prediction 2 above), but companies that have been primarily or even solely dependent on hardware sales are going to have to make some difficult decisions on how they evolve in the era of software and services. As a result, I expect to see more major acquisitions such as the recent Dell/EMC/VMware deal. The challenge, of course, is that many hardware-focused organizations don’t have the in-house skill sets or mindsets to make this transition, so I expect we’ll see very challenging times for some hardware-focused companies in 2016.

Another potential impact from this hardware stall could be an increased desire for hardware companies to become more vertically oriented in order to maximize their opportunity in a shrinking profit pool. This could lead either to acquisitions of key semiconductor vendors and other core component providers by device makers, or vice versa, but either way, hardware-focused companies are going to have to focus on maximizing profitability through reduced costs. After decades of widening the supply chain horizontally, it seems the pendulum is definitely swinging back towards vertical integration.

Prediction 5: Autonomous Car Hype Overshadows Driver Assistance Improvements

The technological advancements in automobiles have been impressive over the last year or two, with the idea of a connected car, and even a partially automated car, quickly moving from science fiction to everyday reality. However, there are still a number of major legislative, social, and technology challenges that need to be overcome before our roadways are filled with self-driving cars. The real advancements that are starting to take place in advanced driver assistance systems (ADAS), such as lane departure warnings, automatic braking, and more sophisticated cruise controls, offer some significant safety benefits. But they’re not as sexy as autonomous driving, so much of the press seems to be overlooking them. Even the car vendors seem to be focused more on delivering their vision of autonomous driving than on what we’ll be able to actually purchase and drive over the next five years. In reality, they’re showing the modern-day version of concept cars instead of production cars, but that point is being missed by many. Remember that, unlike the tech industry, the automotive industry regularly builds and displays products it has little or no intention of ever releasing to the world at large.

Improvements in car electronics and intelligence are happening at an impressive pace, and the quality of our in-car experiences is going to change dramatically over the next several years. It’s important to put all the advancements in context, however, and recognize that they’re not all going to occur at the same time. We’re really just now starting to get high-quality connectivity into the latest generation cars, and there are many improvements that we can expect to see in infotainment systems (with or without Apple and Google’s help) over the next few years. As we learned this past year, there are still critical security implications just from those changes, and they won’t all be easily resolved overnight.

Eventually, we will get to truly autonomous cars that regular people can actually buy, but it’s important to understand and appreciate the step-by-step advancements that are being made along the way. These advancements may not be as revolutionary as driverless cars, but they are the news that the automotive industry can realistically deliver on over the next 12 months. Unfortunately, I think the message is going to be lost in the noise of “autonomous automania” this year, leading to thoroughly confused consumers and unrealistic expectations.

Next week I’ll finish off my 2016 predictions with five more on wearables, foldable displays, IoT, connected homes, and VR/AR. In the meantime, have a Happy New Year!