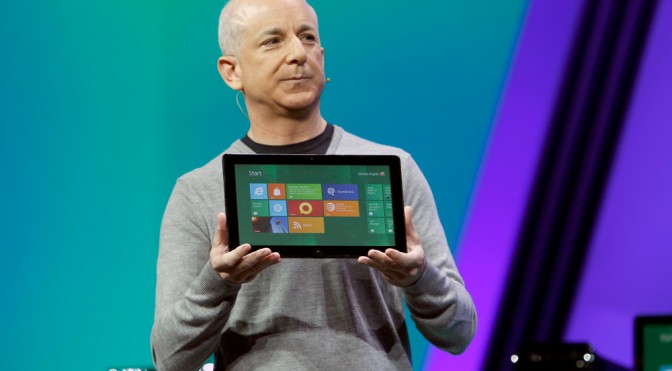

Yesterday, Microsoft unveiled the Windows 8 editions in a blog post and compared their features and functionality. There are three versions: Windows 8, Windows 8 Pro, and Windows RT. One of the biggest changes in Windows 8 versus previous editions is support for the ARM architecture with NVIDIA, Qualcomm, and Texas Instruments, and the new naming reflects it. Windows 8 on ARM, or WOA for short, gets its own name: “Windows RT”. I believe that this naming cuts both ways, with some positives and some challenges for the ARM camp, but the challenges can be mitigated with marketing spend and education.

Windows RT (ARM) versus Windows 8 (X86)

Windows RT and Windows 8 are very similar in some ways and very different in others, and the differences reflect Windows RT’s shedding of legacy... but not completely. The Microsoft blog had a lengthy list of differences, but here are the ones I feel are the most significant to the general, non-geeky consumer.

The following are the relevant consumer features Windows 8 provides over Windows RT:

- Installation of X86 desktop software

- Windows Media Player

The following are the relevant consumer features Windows RT provides over Windows 8:

- Pre-installed Word, Excel, PowerPoint, OneNote

- Device encryption

Again, this isn’t the complete list and I urge you to check out the long listing, but these are the features most relevant to the non-geeky consumer.

What isn’t Addressed

What I would have liked to see discussed at length and in detail was support for hardware peripherals. I will use a personal example to illustrate this. Last week, I bought a new HP Photosmart 7510 printer, scanner and fax machine for $149. While I am confident I will be able to do a basic print with a Windows RT machine, will I be able to use the advanced printer features and be able to scan and fax? We won’t know these details until closer to launch, but this needs to be addressed sooner rather than later.

Next, I would have also liked to see some specifics on battery life and any specific height restrictions for Windows RT tablets. If these devices are intended to be better than an iPad, they will need some experiential consistency to provide consumers with confidence, unlike Android. As I address below, this wasn’t overt, but a little covert.

The Plusses with what Microsoft Disclosed with Windows RT

There are some positive items for the ARM camp in Microsoft’s blog post covering Windows RT. Windows RT does support the primary and secondary tablet-based needs a general consumer would have. According to the detailed blog post, Windows RT supports many features. This comes to light specifically when you put yourself in the shoes of the general consumer, who doesn’t need features like Group Policy, Domain Join, and Remote Desktop Host. Also, I don’t see the absence of Storage Spaces or Windows Media Player as a major issue, for different reasons. Storage Spaces is very geeky and I do not believe the typical consumer would do much with it. I believe Windows RT will have many, many methods of playing video, as we see on the iPad and Android tablets, so the absence of Windows Media Player isn’t a killer, specifically for tablets.

Windows RT also contains Office, specifically Word, Excel, PowerPoint and OneNote, a bundle that sells for $99 today. Finally, while details are sketchy, Windows RT supports complete device encryption. I can only speculate that all data, storage and memory operations are encrypted. This can potentially be leveraged with the consumer, but its absence is not something that has kept the iPad from selling.

A final, important note is the consistent experience I expect Windows RT to deliver. By definition, all Windows RT systems will be lightweight with impressive battery life. While this doesn’t come out as clearly in the blog post, I read between the lines and see where this is headed. I believe Microsoft wants to deliver the most consistency with Windows RT and leave the experience variability to Windows 8.

There will be challenges, though.

The Risks with what Microsoft Disclosed with Windows RT

While there are positives in what Microsoft disclosed on Windows RT, there are risks and potential downsides, too. First and foremost is the absence of the “8”. Regardless of how much Microsoft may attempt to downplay the “8”, consumers fixate on generational modifiers to add value to something. Consumers do this because it makes the choice easy for them. When a consumer walks into a store and sees Windows 8 and Windows RT, I expect them to ask about the difference. What will the answer be from the Best Buy “blue shirt”? Without a tremendous amount of training on “RT”, I would expect them to say, “RT has MS Office, but won’t run older programs. 8 runs all your old programs but doesn’t come with Office.” With that said, the street price adder for Office isn’t public knowledge, but I know that it does add at least $50 to the street price. This is a discount from $99, but then again, I don’t miss having Office on my iPad.

As I discussed above, Microsoft needs to disclose more on backward hardware compatibility. Every day that passes without a more definitive statement, Microsoft draws in the skeptics. What wasn’t discussed in the industry 6 months ago is being discussed now. Finally, how can the lack of X86 desktop software be turned into a positive? The basic consumer, if offered something that is more in their minds for the same price, will always choose more, unless they see a corresponding reason to give something up. Apple has done a fine job with this on the iPad. When the iPad first launched, many focused on what it didn’t have, namely USB ports, SD cards, or the ability to print. The iPad can now print in limited fashion, still has no USB port or SD card slot, and is still selling great. Windows RT needs a distinct value proposition that is related to Windows 8 but also different.

What Needs to Be Done Next

If I were in the ARM camp, I would plead with Microsoft to reconsider the naming. Even adding an “8” to the naming to render “Windows 8 RT” would at least recognize it’s in the same family. Without it, Windows RT looks like part of the Windows family, but not at the “new Windows” table. This can be overcome by spending on a unique value proposition. This distinct value proposition may be that all RT units are thin and lightweight and provide a consistent experience, something that Windows 8 cannot guarantee. The ecosystem then would need to fill “RT” with value and meaning, which will be expensive. Finally, the Windows RT ecosystem needs to start communicating better about peripheral compatibility; with every day that passes, the broader ecosystem gets more nervous. With six months to go, there’s a whole lot of work to do, and a lot more in the Windows RT camp than the Windows 8 camp.