NVIDIA gets most of the attention, and rightfully so, for accelerating the move to more advanced processing technologies by pushing massive amounts of GPU hardware into servers for all kinds of general compute workloads. But Intel has a couple of irons in the fire as well. While we are still waiting to see what Raja Koduri and the graphics team can do on the GPU side, Intel has another angle for improving efficiency and performance in the data center.

Intel’s re-entry into the world of accelerators comes on the heels of a failed attempt to bridge the gap with a twist on its x86 architecture, initially called Larrabee. Intel first announced and demonstrated this technology, which combined dozens of small x86 cores on a single chip, at an IDF, pitching it as a discrete graphics solution. That well dried up quickly, though, as the engineers realized it couldn’t keep up with the likes of NVIDIA and AMD in graphics rendering. Larrabee eventually became the Xeon Phi family of many-core co-processors, whose Knights Landing generation shipped in 2015; the line was killed off in 2017 due to lack of customer demand.

Also in 2015, Intel purchased Altera, one of the largest makers of FPGAs (field programmable gate arrays), for just over $16 billion. These chips are unique in that they can be reprogrammed and adjusted as workloads and algorithms shift, giving enterprises the equivalent of custom-architecture processors whenever they need them. Xilinx is the other major player in this field, and now that Intel has gobbled up Altera, Xilinx must face down the blue-chip giant in a new battle.

Intel’s purchase made a lot of sense even at the time, and the company is now showing the fruits of that labor. As NVIDIA has proven, more and more workloads are shifting from general compute processors like the Xeon family to efficient and powerful secondary compute models. The GPU is the most obvious solution today, but FPGAs are another, and one that is growing substantially with the move to machine learning and artificial intelligence.

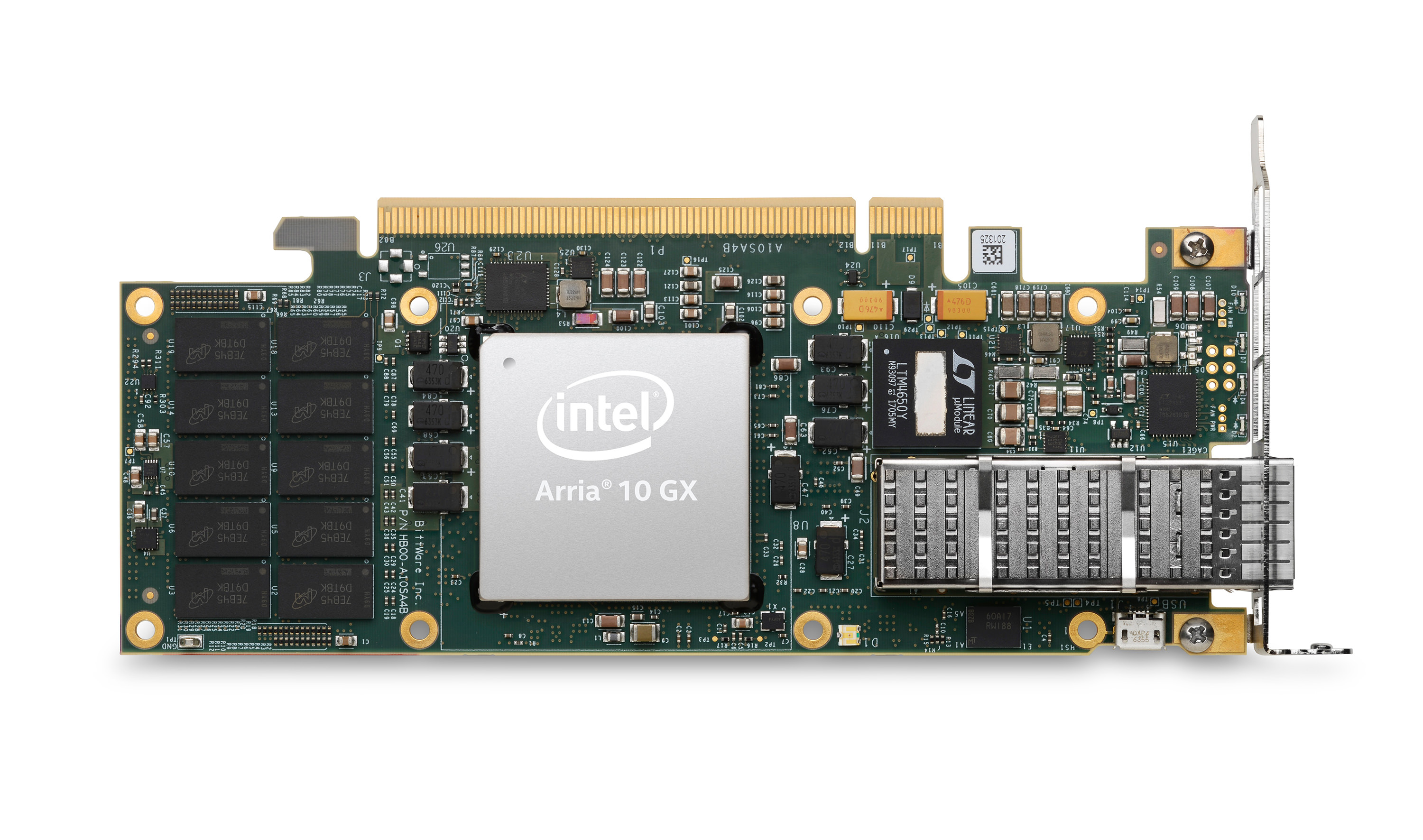

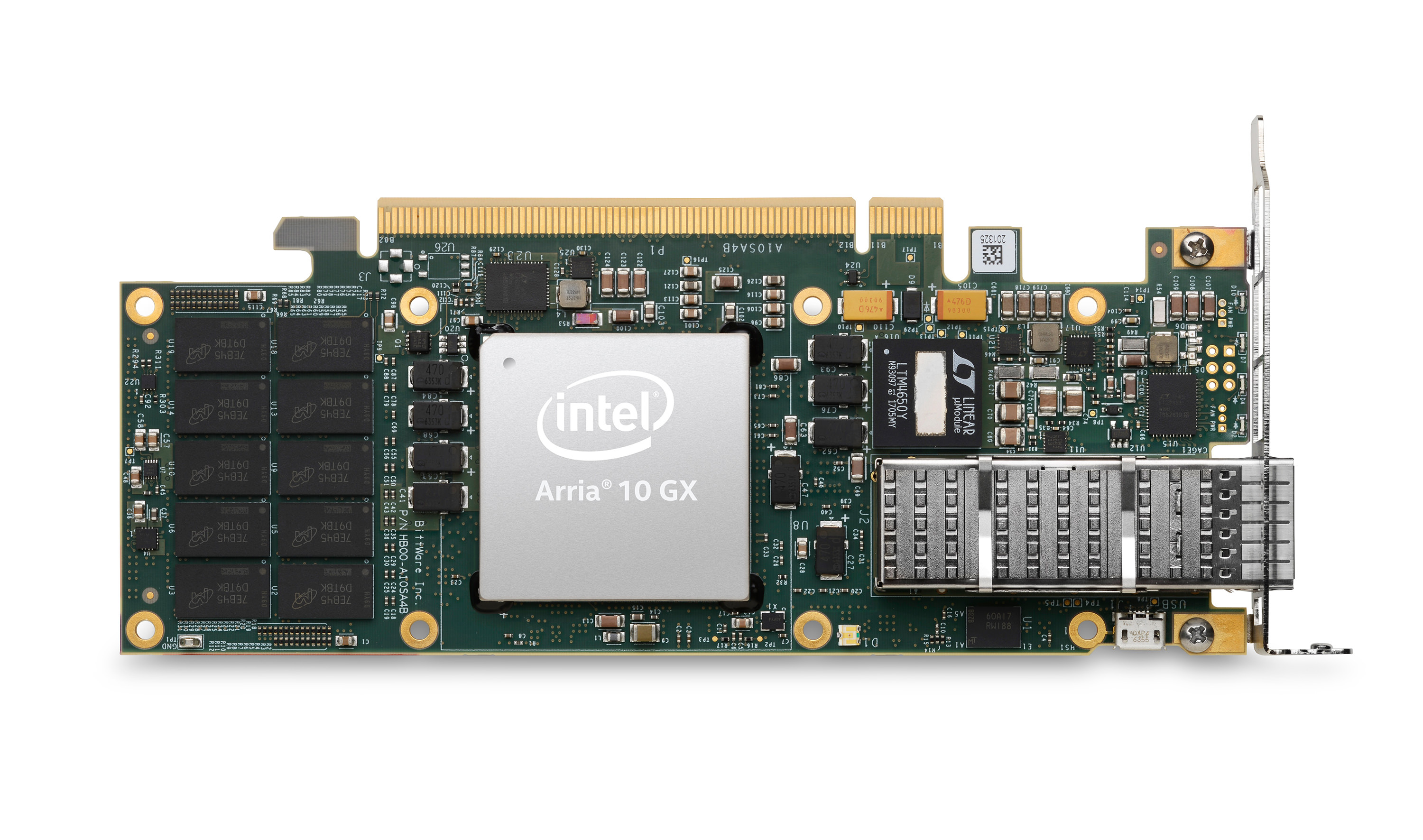

Though the technology initially shipped as a combination of a Xeon processor and an FPGA die on a single package, Intel now offers customers Programmable Acceleration Cards (PACs) that feature the Intel Arria 10 GX FPGA as an add-in option for servers. These are half-height, half-length PCI Express add-in cards with a PCIe 3.0 x8 interface, 8GB of DDR4 memory, and 128MB of flash for storage. They operate within a 60-watt envelope, well below the Xeon CPUs and NVIDIA GPUs they are looking to supplant.

Intel has also spent a lot of time and money developing the software stack for this platform, called the Acceleration Stack for Intel Xeon Scalable processors with FPGAs. It provides acceleration libraries, frameworks, SDKs, and the Open Programmable Acceleration Engine (OPAE), all of which aim to lower the barrier to entry for developers bringing work to FPGAs. One of Intel’s biggest strengths over the last 30 years has been its focus on developers and on enabling them to write code for its hardware effectively; I have little doubt Intel’s tooling for the Altera line will be class-leading.

Adoption of the accelerators should pick up with the news that Dell EMC and Fujitsu are selling servers that integrate the FPGAs for the mainstream market. Gaining traction with top-tier OEMs like Dell EMC means awareness of the technology will increase quickly, and adoption, if the Intel software tools do their job, should spike. The Dell PowerEdge R740 and R740XD will support up to four FPGAs, while the R640 will support a single add-in card.

Specific performance claims are light, mainly because every FPGA implementation is tailored to the customer using and coding for it. Even so, Intel has stated that tests with the Arria 10 GX FPGA showed a 2x improvement in options trading performance, 3x better storage compression, and 20x faster real-time data analytics. One software partner, Levyx, which provides high-performance data processing software for big data, built an FPGA-powered system that achieved “an eight-fold improvement in algorithm execution and twice the speed in options calculation compared to traditional Spark implementations.”

These are impressive numbers, though Intel has a long way to go before adoption of this and future FPGA technologies can rival what NVIDIA has done in the data center. There is a large opportunity in AI, genomics, security, and more. Intel hasn’t demonstrated a sterling record of breaking into new markets in recent years, but thanks to the experience and expertise the Altera team brought with the 2015 acquisition, Intel appears to be on the right track to give Xilinx a run for its money.