Though it’s a year shy of the big decade marker, 2019 looks to be one of the most exciting and most important years for the tech industry in some time. Thanks to the upcoming launch of some critical new technologies, including 5G and foldable displays, as well as critical enhancements in on-device AI, personal robotics, and other exciting areas, there’s a palpable sense of expectation for the new year that we haven’t felt for a while.

Plus, 2018 ended up being a pretty tough year for several big tech companies, so there are also a lot of folks who want to shake the old year off and dive headfirst into an exciting future. With that spirit in mind, here’s my take on some of what I expect to be the biggest trends and most important developments in 2019.

Prediction 1: Foldable Phones Will Outsell 5G Phones

At this point, everyone knows that 2019 will see the “official” debut of two very exciting technological developments in the mobile world: foldable displays and smartphones equipped with 5G modems. Several vendors and carriers have already announced these devices, so now it’s just a question of when and how many.

Not everyone realizes, however, that the two technologies won’t necessarily come hand-in-hand this year: we will see 5G-enabled phones and we will see smartphones with foldable displays. As of yet, it’s not clear that we’ll see devices that incorporate both capabilities in calendar year 2019. Eventually, of course, we will, but the challenges in bringing each of these cutting-edge technologies to the mass market suggest that some devices will include one or the other. (To be clear, however, the vast majority of smartphones sold in 2019 will have neither an integrated 5G modem nor a foldable display—high prices for both technologies will limit their impact this year.)

In the near term, I’m predicting that foldable display-based phones will be the winner over 5G-equipped phones, because the impact that these bendable screens will have on device usability and form factor is so compelling that I believe consumers will be willing to forgo the potential 5G speed boost. Plus, given concerns about pricing for 5G data plans, limited initial 5G coverage, and the confusing (and, frankly, misleading) claims being made by some US carriers about their “versions” of 5G, I believe consumers will limit their adoption of 5G until more of these issues become clear. Foldable phones, on the other hand, are likely to be expensive but will offer a very clear value benefit that I believe consumers will find even more compelling.

Prediction 2: Game Streaming Services Go Mainstream

In a year when there’s going to be a great deal of attention placed on new entrants to the video streaming market (Apple, Disney, Time Warner, etc.), the surprise breakout winner in cloud-based entertainment in 2019 could actually be game streaming services, such as Microsoft’s Project xCloud (based on its Xbox gaming platform) and other possible entrants. The idea with game streaming is to enable people to play top-tier games across a wide range of both older and newer PCs, smartphones, and other devices. Given the tremendous growth in PC and mobile gaming, along with the rise in popularity of eSports, the consumer market is primed for a service (or two) that would allow gamers to play popular high-quality gaming titles across a wide range of different device types and platforms.

Of course, game streaming isn’t a new concept, and there have been several failed attempts in the past. The challenge is delivering a timely, engaging experience in the often-unpredictable world of cloud-driven connectivity. It’s an extraordinarily difficult technical task that requires lag-free responsiveness and high-quality visuals packaged together in an easy-to-use service that consumers would be willing to pay for.

Thankfully, a number of important technological advancements are coming together to make this possible now, including improvements in overall connectivity via Wi-Fi (such as with Wi-Fi 6) and wide area cellular networks (and 5G should improve things even more). In addition, there’s been widespread adoption and optimization of GPUs in cloud-based servers. Most important, however, are software advancements that can enable technologies like split or collaborative rendering (where some work is done in the cloud and some on the local device), as well as AI-based predictions of actions that need to be taken or content that needs to be preloaded. Collectively, these and other related technologies seem poised to enable a compelling set of gaming services that could drive impressive levels of revenue for the companies that can successfully deploy them.

It’s also important to add that although strong growth in game streaming services that are less hardware dependent may imply a negative impact on gaming-specific PCs, GPUs, and other game-focused hardware (because people would be able to use older, less powerful devices to run modern games), the opposite is in fact likely to be true. Game streaming services will likely expose an even wider audience to the most compelling games and that, in turn, will likely inspire more people to purchase gaming-optimized PCs, smartphones, and other devices. The gaming service will give them the opportunity to play (or continue playing) those games in situations or locations where they don’t have access to their primary gaming devices.

Prediction 3: Multi-Cloud Becomes the Standard in Enterprise Computing

The early days of cloud computing in the enterprise featured prediction after prediction of a winner between public cloud and private cloud, and even of specific cloud platforms within those environments. As we enter 2019, it’s becoming abundantly clear that all those arguments were wrongheaded and that, in fact, everyone won and everyone lost at the same time. After all, which of those early prognosticators would have ever guessed that in 2018, Amazon would offer a version of Amazon Web Services (called AWS Outposts) that a company could run on Amazon-branded hardware in the company’s own data center/private cloud?

It turns out that, as with many modern technology developments, there’s no single cloud computing solution that works for everybody. Public, private, and hybrid combinations all have their place, and within each of those groups, different platform options all have a role. Yes, Amazon currently leads overall cloud computing, but depending on the type of workload or other requirements, Microsoft’s Azure, Google’s GCP (Google Cloud Platform), or IBM, Oracle, or SAP cloud offerings might all make sense.

The real winner is the cloud computing model, regardless of where or by whom it’s being hosted. Not only has cloud computing changed expectations about performance, reliability, and security, but the DevOps software development environment it inspired and the container-focused application architecture it enabled have also radically reshaped how software is written, updated, and deployed. That’s why you see companies shifting their focus away from the public infrastructure-based aspects of cloud computing and towards the flexible software environments it enables. This, in turn, is why companies have recognized that leveraging multiple cloud types and cloud vendors isn’t a weakness or disjointed strategy, but actually a strength that can be leveraged for future endeavors. With cloud platform vendors expected to work towards more interoperability (and transportability) of workloads across different platforms in 2019, it’s very clear that the multi-cloud world is here to stay.

Prediction 4: On-Device AI Will Start to Shift the Conversation About Data Privacy

One of the least understood aspects of using tech-based devices, mobile applications, and other cloud-based services is how much of our private, personal data is being shared in the process—often without our even knowing it. Over the past year, however, we’ve all started to become painfully aware of how big (and far-reaching) the problem of data privacy is. As a result, there’s been an enormous spotlight placed on data handling practices employed by tech companies.

At the same time, expectations about technology’s ability to personalize these apps and services to meet our specific interests, location, and context have also continued to grow. People want and expect technology to be “smarter” about them, because it makes the process of using these devices and services faster, more efficient, and more compelling.

The dilemma, of course, is that enabling this customization requires access to, and use of, some level of personal data, usage patterns, etc. Up until now, that has typically meant that most any action you take or information you share has been uploaded to some type of cloud-based service, compiled and compared to data from other people, and then used to generate some kind of response that’s sent back down to you. In theory, this gives you the kind of customized and personalized experience you want, but at the cost of your data being shared with a whole host of different companies.

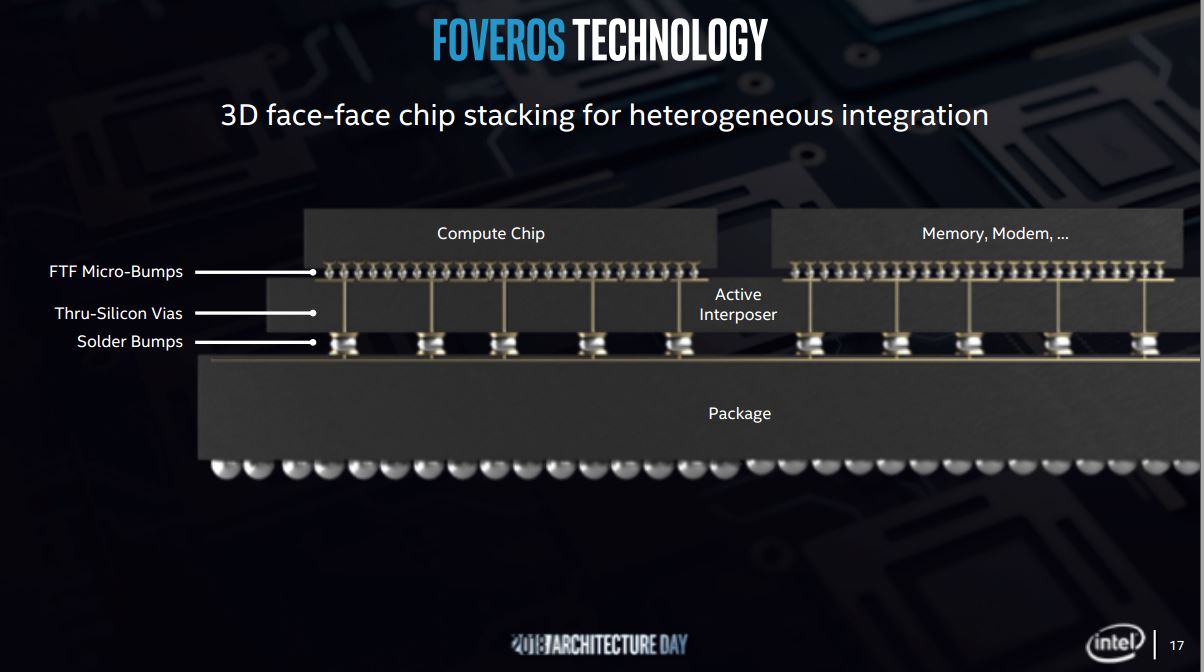

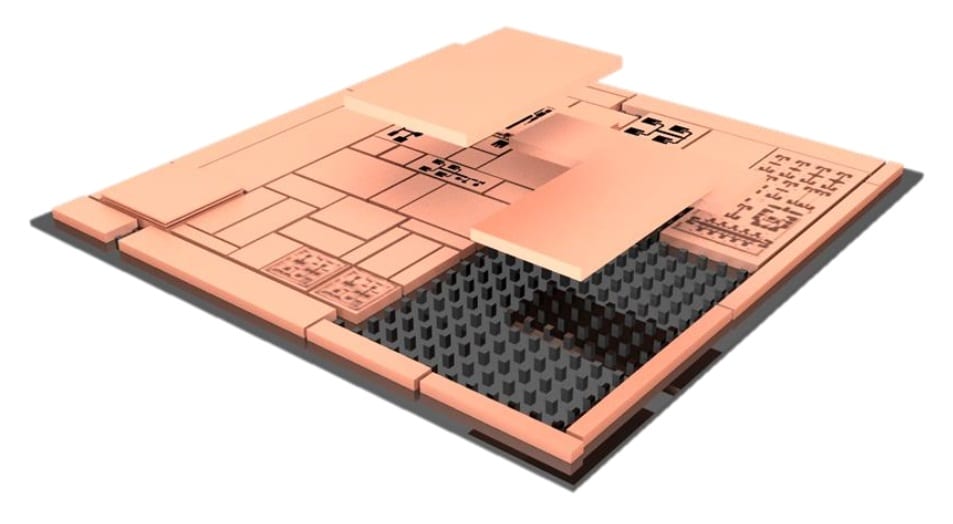

Starting in 2019, more of the data analysis work could start being done directly on devices, without the need to share all of it externally, thanks to the AI-based software and hardware capabilities becoming available on our personal devices. Specifically, the idea of doing on-device AI inferencing (and even some basic on-device training) is now becoming a practical reality thanks to work by semiconductor-related companies like Qualcomm, Arm, Intel, Apple, and many others.

What this means is that—if app and cloud service providers enable it (and that’s a big if)—you could start getting the same level of customization and personalization you’ve become accustomed to, but without having to share your data with the cloud. Of course, it isn’t likely that everyone on the web is going to start doing this all at once (if they do it at all), so inevitably some of your data will still be shared. However, if some of the biggest software and cloud service providers (think Facebook, Google, Twitter, Yelp, etc.) started to enable this, it could start to meaningfully address the legitimate data privacy concerns that have been raised over the last year or so.

Apple, to its credit, started talking about this concept several years back (remember differential privacy?) and already stores things like facial recognition scans and other personally identifiable information only on individuals’ devices. Over the next year, I expect to see many more hardware and component makers take this to the next level by talking not just about their on-device data security features, but also about how onboard AI can enhance privacy. Let’s hope that more software and cloud-service providers enable it as well.
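For anyone who doesn’t remember differential privacy, one classic flavor of it is “randomized response”: each device deliberately adds noise to its own answer before reporting it, so no single report can be trusted, yet the overall trend can still be estimated. The short Python sketch below is purely illustrative and is not Apple’s implementation; the function names, the epsilon value, and the simulated population are all assumptions made up for this example.

```python
import math
import random


def randomize(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true answer with probability e^eps / (e^eps + 1), else flip it."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return true_bit if rng.random() < p_truth else not true_bit


def estimate_rate(reports: list[bool], epsilon: float) -> float:
    """Debias the noisy reports to estimate the true fraction of True answers."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    # observed = p*rate + (1 - p)*(1 - rate)  =>  rate = (observed - (1 - p)) / (2p - 1)
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


def simulate(n_users: int = 100_000, true_rate: float = 0.30,
             epsilon: float = 1.0, seed: int = 0) -> float:
    """Hypothetical population: each simulated device holds one private yes/no fact."""
    rng = random.Random(seed)
    truths = [rng.random() < true_rate for _ in range(n_users)]
    # Only the randomized reports ever leave the (simulated) devices.
    reports = [randomize(t, epsilon, rng) for t in truths]
    return estimate_rate(reports, epsilon)


if __name__ == "__main__":
    print(f"estimated rate: {simulate():.3f}")
```

A smaller epsilon means more noise per report (stronger privacy) and a less precise estimate, which is the basic trade-off any such scheme has to manage.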

Prediction 5: Tech Industry Regulation in the US Becomes Real

Regardless of whether major social media firms and tech companies enable these onboard AI capabilities or not, it’s clear to me that we’ve reached a point in the US social consciousness where tech companies managing all this personal data need to be regulated. While I’ll be the first to admit that the slow-moving government regulatory process is ill-matched to the rapidly evolving tech industry, that’s still not an excuse for not doing anything. As a result, in 2019, I believe the first government regulations of the tech industry will be put into place, specifically around data privacy and disclosure rules.

It’s clear from the backlash that companies like Facebook have been receiving that many consumers are very concerned with how much data has been collected not only about their online activities, but also about their location and many other very specific (and very private) aspects of their lives. Despite the companies’ claims that we gave over most all of this information willingly (thanks to the confusingly worded and never read license agreements), common sense tells us that the vast majority of us did not understand or know how the data was being analyzed and used. Legislators from both parties recognize these concerns and, despite the highly polarized political climate, are likely to agree readily on some kind of limitations on the type of data that’s collected, how it’s analyzed, and how it’s ultimately used.

Whether the US builds on Europe’s GDPR, the privacy laws enacted in California last year, or something entirely different remains to be seen, but now that the value and potential impact of personal data have been made clear, there’s no doubt we will see laws that control this valued commodity.

Prediction 6: Personal Robotics Will Become an Important New Category

The idea of a “sociable” robot, one that people can have relatively natural interactions with, has been the lore of science fiction for decades. From Lost in Space to Star Wars to WALL-E and beyond, interactive robotic machines have been the stuff of our creative imagination for some time. In 2019, however, I believe we will start to see more practical implementations of personal robotics devices from a number of major tech vendors.

Amazon, for example, is widely rumored to be working on some type of personal assistant-based robot leveraging its Alexa voice-based digital assistant technology. Exactly what form the device might take and what sort of capabilities it might have are unclear, but some type of mobile (as in, able to move, not small and lightweight!) visual smart display that also offers mechanical capabilities (lifting, carrying, sweeping, etc.) might make sense.

While a number of companies have tried and failed to bring personal robotics to the mainstream in the recent past, I believe a number of technologies and concepts are coming together to make the potential more viable this year. First, from a purely mechanical perspective, the scarily realistic capabilities now exhibited by companies like Boston Dynamics show how far the movement, motion, and environmental awareness capabilities have advanced in the robotics world. In addition, the increasingly conversational and empathetic AI capabilities now being brought to voice-based digital assistants, such as Alexa and Google Assistant, demonstrate how our exchanges with machines are becoming more natural. Finally, the appeal of products like Sony’s updated Aibo robotic dog also highlights the willingness that people are starting to show towards interacting with machines in new ways.

In addition, robotics-focused hardware and software development platforms (like Nvidia’s latest Jetson AGX Xavier board and Isaac software development kit), key advances in computer vision, and the growing ecosystem around the open-source ROS (Robot Operating System) all underscore the growing body of work being done to enable both commercial and consumer applications of robots in 2019.

Prediction 7: Cloud-Based Services Will Make Operating Systems Irrelevant

People have been incorrectly predicting the death of operating systems and unique platforms for years (including me back in December of 2015), but this time it’s really (probably!) going to happen. All kidding aside, it’s becoming increasingly clear as we enter 2019 that cloud-based services are rendering the value of proprietary platforms much less relevant for our day-to-day use. Sure, the initial interface of a device and the means for getting access to applications and data are dependent on the unique vagaries of each tech vendor’s platform, but the real work (or real play) of what we do on our devices is becoming increasingly separated from the artificial world of operating system user interfaces.

In both the commercial and consumer realms, it’s now much easier to get access to what it is we want to do, regardless of the underlying platform. On the commercial side, the increasing power of desktop and application virtualization tools from the likes of Citrix and VMware, as well as moves like Microsoft’s delivery of Windows desktops from the cloud, all demonstrate how much simpler it is to run critical business applications on virtually any device. Plus, the growth of private (on-premises), hybrid, and public cloud environments is driving the creation of platform-independent applications that rely on nothing more than a browser to function. Toss in Microsoft’s decision to leverage the open-source Chromium browser rendering engine for the next version of its Edge browser, and it’s clear we’re rapidly moving to a world in which the cloud finally and truly is the platform.

On the consumer side, the rapid growth of platform-independent streaming services is also promoting the disappearance (or at least sublimation) of proprietary operating systems. From Netflix to Spotify to even the game streaming services mentioned in Prediction 2, successful cloud-based services are building most all of their capabilities and intelligence into the cloud and relying less and less on OS-specific apps. In fact, it will be very interesting to see how open and platform agnostic Apple makes its new video streaming service. If they make it too focused on Apple OS-based device users only, they risk having a very small impact (even with their large and well-heeled installed base), particularly given the strength of the competition.

Crossover work and consumer products like Office 365 are also shedding any meaningful ties to specific operating systems and instead are focused on delivering a consistent experience across different operating systems, screen sizes, and device types.

The concept of abstraction goes well beyond the OS level. New software being developed to leverage the wide range of different AI-specific accelerators from vendors like Qualcomm, Intel, and Arm (AI cores in their case) is being written at a high-enough level to allow it to work across a very heterogeneous computing environment. While this might have a modest impact on full performance potential, the flexibility and broad support that this approach enables are well worth it. In fact, it’s generally true that the more heterogeneous the computing environment grows, the less important operating systems and proprietary platforms become. In 2019, it’s going to be a very heterogeneous computing world, hence my belief that the time for this prediction has finally come.