It will come as no surprise that tech is a big part of my life, not just my job. As such, many of the books around the house, the podcasts I listen to and the documentaries I watch are tech-related. If you read my article ‘What “Hidden Figures” Can Teach Us about AI’ or follow me on Twitter, you also know I have a 9-year-old daughter who is mixed race. So, as a mom, I always try to make sure my girl has role models who share her gender and ethnic background. When it comes to tech, however, finding the names of black leaders is still not that easy.

Let’s Look at the Numbers

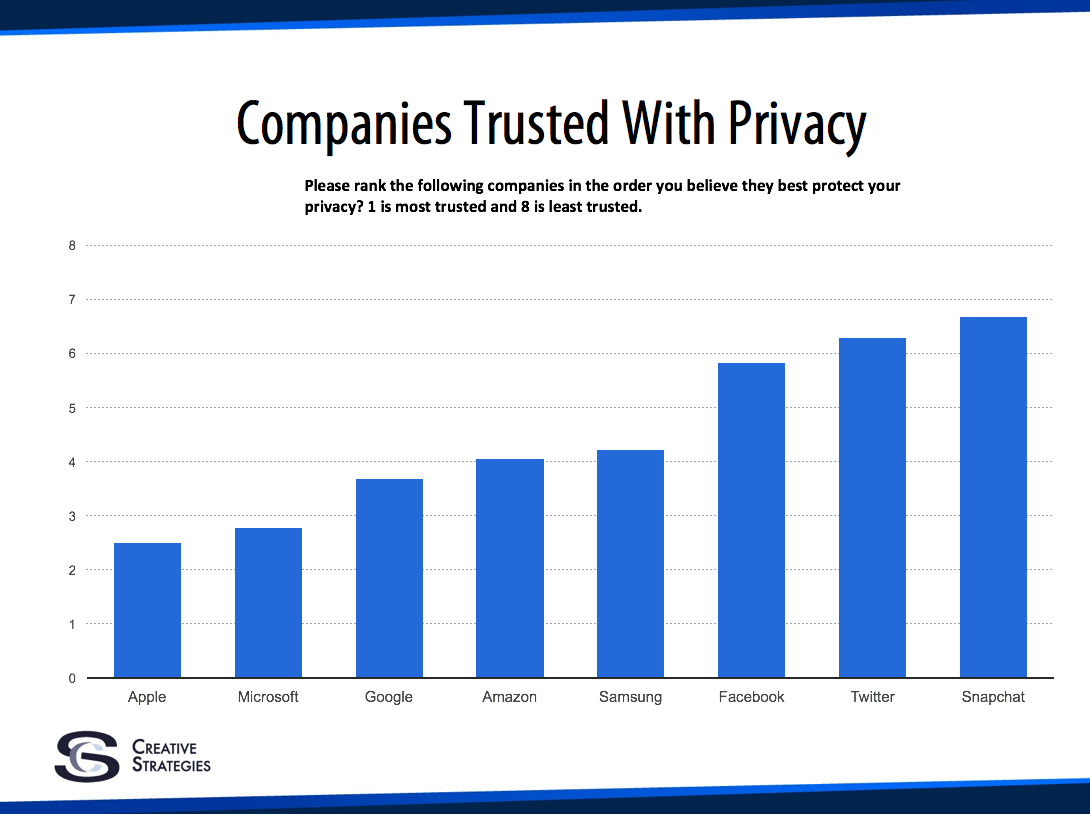

The most recent Apple Inclusion and Diversity Report shows that black employees make up 9% of the current workforce and 13% of new hires. In leadership, however, that 9% drops to 3%, a number that has not changed since 2014. At Google, black employees represent only 2% of both the overall staff and the leadership. At Microsoft, 3.7% of employees are black, and black employees hold only 2.1% of leadership positions. Amazon’s workforce is only 5% black (I could not find any information on how black employees are represented in leadership roles). At Facebook, black employees represent 2% of overall employees and 3% of senior leadership. Twitter, which just last week lost its diversity chief, had recently shared diversity numbers showing that the percentage of black employees in its workforce remained the same as in 2015: 3%. This was after a very public diversity pledge.

It’s hard for me to look at these numbers and feel encouraged about how inclusive tech really is and what opportunities my daughter will have in it.

The Diversity Wheel

The Diversity Wheel was created by Marilyn Loden in the 1990s to better understand how group-based differences contribute to people’s social identities. There have been several iterations of the Diversity Wheel, but the most common is made up of three circles:

- Internal Dimensions – age, gender, physical ability and race. These are usually the most permanent dimensions, and also the most visible.

- External Dimensions – marital status, work experience, income

- Organizational Dimensions – management status, work location, work field

The latter two circles represent dimensions that are acquired over time and can also change over time.

Educational background is one of the external dimensions that contributes to people’s social identity. A recent report by Georgetown University found that, while the number of African-Americans going to college has never been higher, African-American college students are more likely to pursue majors that lead to low-paying jobs. Law and public policy is the top major for African-Americans with a bachelor’s degree. The highest-paying major among African-Americans is health and medical administration. The second-lowest-paying major among African-Americans is human services and community organization, with median earnings of $39,000. African-Americans account for only eight percent of general engineering majors, seven percent of mathematics majors and five percent of computer majors. Even those who do major in high-paying fields typically choose the lowest-paying major within them. For example, most black women in STEM study biology, the lowest-paying of the science disciplines. Among engineers, most black men study civil engineering, the lowest-paying field in that sector.

A very interesting point the report also raises is that African-Americans with strong community-based values tend to choose college majors that reflect those values. Despite comprising just 12 percent of the population, African-Americans make up 20 percent of all community organizers.

Incorporating elements of community service into careers in tech, business and STEM will increase their appeal to African-American students and will be a way for tech to become more visible in those communities. This can become a virtuous circle of evangelization, but it needs to start with black students seeing the opportunity first.

Diversity is the Nation’s Unfinished Business

So how do you break the cycle in the first place? How can my little girl be inspired to go into tech if she does not see enough people like her, not just working in tech but succeeding in it? Dr. Marilyn Sanders Mobley, Chief Diversity Officer at Case Western Reserve University, refers to diversity as “the nation’s unfinished business”. When it comes to tech, that is certainly the case.

The recent focus on immigration has led many to comment on how diverse Silicon Valley is. You only need to stroll through Mountain View to bump into Chinese, Korean, European and Indian people. But this only means Silicon Valley is international, not diverse. Dr. Sanders Mobley says you cannot address what you cannot acknowledge, and that this starts with acknowledging blind spots. Here is the first one: internationalism and diversity are not one and the same.

Another important point Dr. Sanders Mobley highlights is that fostering diversity in the workplace requires affinity and employee resource groups. Not everybody will need or use them, but they are necessary to provide a sense of belonging.

So it starts with empowering students to enter the workplace aiming for better-paying jobs and for management and leadership positions, and then with creating a work environment that fosters a sense of belonging. Kimberly Bryant’s effort with Black Girls Code is a great example of how to plant the seed with kids, in this case girls, right when they are starting to think about what they want to be when they grow up.

While black students are underrepresented in tech education, this is not the ultimate issue, as there are still more black students graduating than there are currently working in tech. How is that possible? Mostly because the recruiting process is broken. Silicon Valley often looks within itself. Employee referral programs are very common, and recruiters, who often have no coding or engineering expertise, tend to treat Ivy League universities and big tech names like Google and Apple on a resume as a measure of a candidate’s ability. Then there is hiring bias. Blind resumes, like the ones Blendoor offers, help make a candidate visible to the recruiter but do not necessarily guarantee an interview, let alone a job.

Widening the pipeline, changing recruiting techniques and increasing awareness of bias will all help solve the ultimate issue in attracting a diverse workforce: nobody wants to be a tick in the box of a diversity report. It is hard to attract a diverse workforce when the company’s current mix is predominantly white and male. It is even harder for a black kid to believe he or she can be the next Steve Jobs.