We are almost at the end of 2019, and as I prepare for CES, I look back at this year to see what characterized tech over the past twelve months. So much happened that remembering every product and every piece of news is almost impossible. When I look back at the big themes that shaped tech, five stood out to me: Streaming, China, Foldable, 5G, and Regulation.

Streaming

It seems as though 2019 was the year when everybody decided that consumers did not have enough options when it came to video streaming. Despite an already long list of streaming services, from Netflix, Hulu, and Prime Video to HBO, two new brands came barging into what they believe is still a big opportunity, not just for connecting with consumers but also for getting a slice of the revenue pie.

After a big launch event in March, Apple started airing its Apple TV+ content at the beginning of November, followed shortly after by Disney+. One day after launch, Disney shared that Disney+ had reached 10 million signups. Of course, signups don’t necessarily equal subscribers. Many people could opt out after the seven-day free trial. Some could be among the 20 million Verizon customers who received a free subscription for the first 12 months. Nevertheless, the numbers seem to bode well for the service’s revenue.

Judging the success of Apple TV+ will be equally difficult, considering that we shouldn’t expect much guidance from Apple’s earnings calls. Plus, any consumer who bought a new iPhone, iPad, Mac, or Apple TV would have received a year’s free subscription.

What is clear, however, is that both services have yet to impact the fortunes of the long-standing streaming service Netflix, the brand that most feared would be affected. It seems as though the hunger for content remains, and consumers seem happy to add to their budget for worthwhile content. Interestingly, though, both services ended up launching at a lower price than most people expected, maybe a sign of awareness of how crowded this market already was.

2020 seems set to be just as busy, with new services expected from HBO, Quibi, Peacock, and Discovery/BBC. I continue to believe that the biggest threat these new services represent is to cable TV rather than to one another. Cable subscriptions are not just costly, but they also fail to show a clear benefit associated with the amount users pay every month. It’s not so much about cord-cutting but more about making sure the money you pay sees a clear return. I think bundling will be the real battle going forward. We already started to see Disney+, Hulu, and ESPN coming together under one subscription, and I expect Apple will bundle some of its services in 2020. Whether it will be Apple TV+ and Apple Music or Apple TV+ and Apple News remains to be seen, but my bet is bundled services are on the map.

China

Whether you are thinking about Huawei, the U.S./China tariffs, or the race to 5G and AI, China was very much at the center of many technology conversations during 2019. The growth and importance that China has represented in technology over the past few years seem to have shifted in 2019, from growth as a market opportunity to growth as a supplier opportunity. China was no longer just the market that offered a lot of sales potential; it became the market where a lot of technologies had their first moment in both development and commercialization.

5G and AI are probably the most prominent tech trends of the next decade, and China is certainly on a mission to dominate both. While a lot of the innovation in these two areas was born in the US, China has been faster to deploy them thanks to government and regulatory decisions that support rather than hinder commercialization.

The tension between the two countries escalated when the US moved to halt Huawei’s ability to sell equipment in the US and, more importantly, to buy parts from US suppliers, by adding Huawei to a Commerce Department blacklist that included another eight Chinese companies. It soon became obvious that Huawei was being used as a pawn by the Trump administration in its bargaining with China over favorable tariffs. In what seemed like a chess game, the administration granted temporary reprieves that allowed US companies to continue to sell parts to Huawei. Microsoft, for instance, said it got a license to sell “mass-market software” to Huawei. Yet, the US Federal Communications Commission voted to prohibit the use of federal subsidies to buy telecommunications equipment made by Huawei and ZTE and said it would consider requiring carriers now using the products to remove them.

The tariff battle between the US and China culminated this month in the Chinese government ordering all government offices and public institutions to remove foreign computer devices and software within three years, adding to the complications non-Chinese OEMs are already facing with tariffs. Ironically, given the complaints made against Huawei, this mandate is part of China’s longer-term push for “secure and controllable” technology in government and critical industries as part of its Cyber Security Law, which should boost domestic PC makers’ share.

The ramifications of this power struggle between the two superpowers will continue into 2020, with both countries possibly opening up manufacturing opportunities for other geographies like Southeast Asia.

Foldable

Right out of CES 2019, it was clear that this was the year of foldables, if not for sales, certainly for marketing buzz. After years of more or less attractive rectangular pieces of glass, people were excited about new phone form-factors and even about experimenting with PC designs.

Yet, as is often the case with cutting-edge technology, getting off the ground wasn’t as smooth as many expected. Samsung’s launch of the Galaxy Fold was negatively impacted by some design decisions around the screen protector and hinge. Spring almost turned into Fall as Samsung delayed the official launch of the Galaxy Fold and made some changes to tighten the area around the hinge and tuck the screen protector under the bezel. The second highly anticipated foldable smartphone was the Huawei Mate X, which has, so far, been released only in China. An international launch, said to be dependent on carriers’ 5G rollouts, has in reality been put on hold by the lack of Google services.

Towards the end of the year, we also saw a more mainstream design with the Motorola Razr. The Razr injects some positivity back into this segment after the issues Samsung and Huawei have had. The inward-folding solution protects the screen, and the design requires neither developers to rethink their apps for a larger screen nor users to rethink how they use their phones. In other words, you can enjoy the Razr’s familiarity and uniqueness all at the same time.

Foldables also became a talking point in the PC market, where Lenovo introduced the first foldable PC, the ThinkPad X1. As we know, however, people seem to be much more flexible and open to new form factors and experiences with smartphones than they are with PCs. This attitude, and the high price tag associated with the first devices, is why dual-screen devices will play a bigger role in 2020, driven both by Intel and its Athena project and by Microsoft and its Surface Neo running Windows 10X, a new incarnation of the Windows operating system focused on delivering a more agile set of workflows.

5G

It seems strange to talk about 2019 as the year of 5G because, in fairness, it was more about the time spent talking about 5G than about the availability of 5G services. As we close the year, you will have quite a different opinion of 5G depending on which region you’re located in. In the US, industry watchers, as well as consumers, seem to continue to have more questions than answers. And this is not because anyone is questioning the impact that 5G will eventually have on the way that both people and things will be connected and communicate. It is more because much of the marketing and messaging, especially from the carriers, continues to confuse rather than excite or reassure. We continue to see marketing messages focusing on coverage maps and ideal speeds, but not much time is spent showing the real value of betting on 5G.

Today, as companies start to invest in private 5G networks, the value is becoming more transparent. Still, for consumers, the limited number of devices, the higher price points of these devices, and the extra cost associated with some 5G plans are leaving people skeptical. The jostling among carriers to come out as the leader is, unfortunately, ending up hurting 5G’s overall value proposition.

2020 will see a broader device selection, as well as broader price points, bringing 5G functionality down into the mid-tier portfolio. And, more importantly, a lot of the confusion around network frequencies will go away as chipsets will be able to support multiple frequencies.

Regulation

Big Tech was undoubtedly on the agenda in Washington this year! There was plenty to choose from: the FTC and the internet; the FTC and Qualcomm; Google, Twitter, and Facebook all going to Washington; and breaking up Big Tech as a big part of the 2020 election agenda.

Privacy was on the agenda in the US and Europe as GDPR started rolling out earlier in the year. Apple was the only vendor that was able to pivot on the push for privacy and made it a big marketing message throughout the year, talking about privacy as a fundamental civil right.

Antitrust was also on the agenda as politicians called for breaking up Big Tech mostly because it seemed the only way many felt they could hinder Facebook, Amazon, and Google from becoming even more powerful.

Other tech areas that have been under scrutiny are bitcoin, encryption, and AI. Regulators tried to put a stop to Libra, Facebook’s cryptocurrency, for fear of the impact that an unregulated cryptocurrency might have on traditional currencies. Luckily for them, key initial partners such as PayPal, Visa, MasterCard, and eBay all backed away from the project.

This month, US lawmakers threatened to pass legislation that would force tech companies to provide court-ordered access to encrypted devices and messages. Law enforcement officials argue that encryption keeps them from accessing criminals’ devices, while tech companies continue to be concerned that creating a backdoor into any device or messaging service opens up the opportunity for bad actors to maliciously access them too. This is the same argument made by Apple against the FBI after the San Bernardino shooting.

After leading the way on privacy, Europe seems to want to shift its attention to AI, with Ms. Vestager, Europe’s Commissioner for Competition, pledging to create the world’s first regulations around artificial intelligence as well as to deliver rights to gig economy workers like Uber drivers.

All these big topics will remain on the agenda for politicians in the US, Europe, and Australia. Still, I have to admit that I hope to see a much better understanding in the US not just on technology but also on business models and the role that technology will play in key areas such as education, transportation, and real estate.

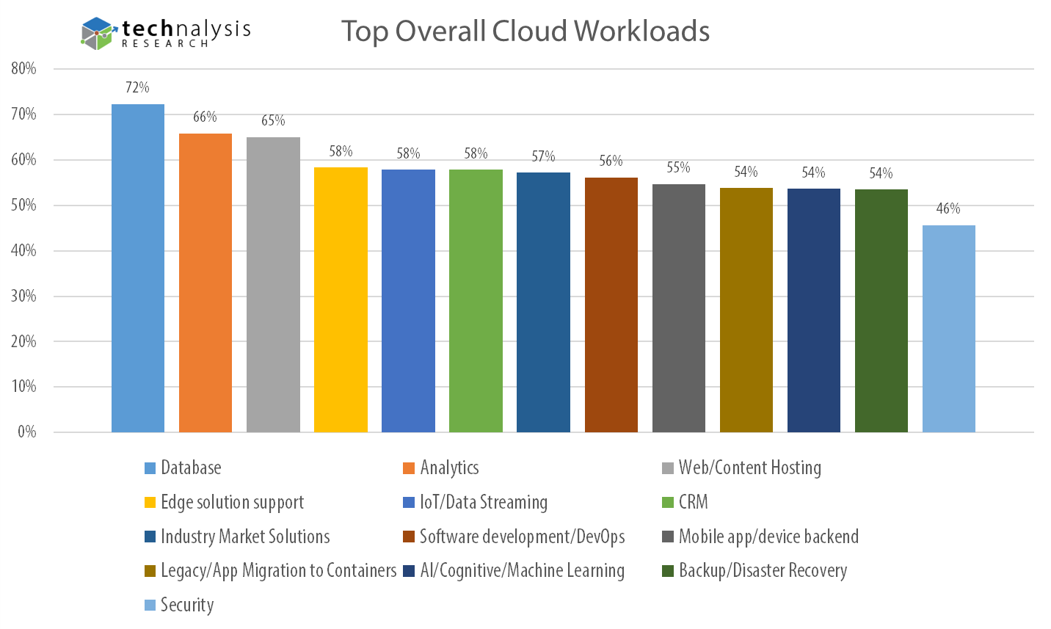

2019 was also the year of tech leadership shakeups, TikTok, silicon, diversity and inclusion (albeit more as a talking point), cloud, and edge. As we look ahead to 2020, we can rest assured we will see many of these big themes develop further, with some materially impacting our daily lives but with all certainly absorbing more marketing budget.
