Last month, Benedict Evans wrote a great post talking about unfair comparisons. He faced some criticism for comparing PC market sales to smartphone market sales. Many claimed it was unfair. What if Benedict’s comparison actually isn’t unfair? What if comparing PCs to smartphones is exactly the right comparison to make?

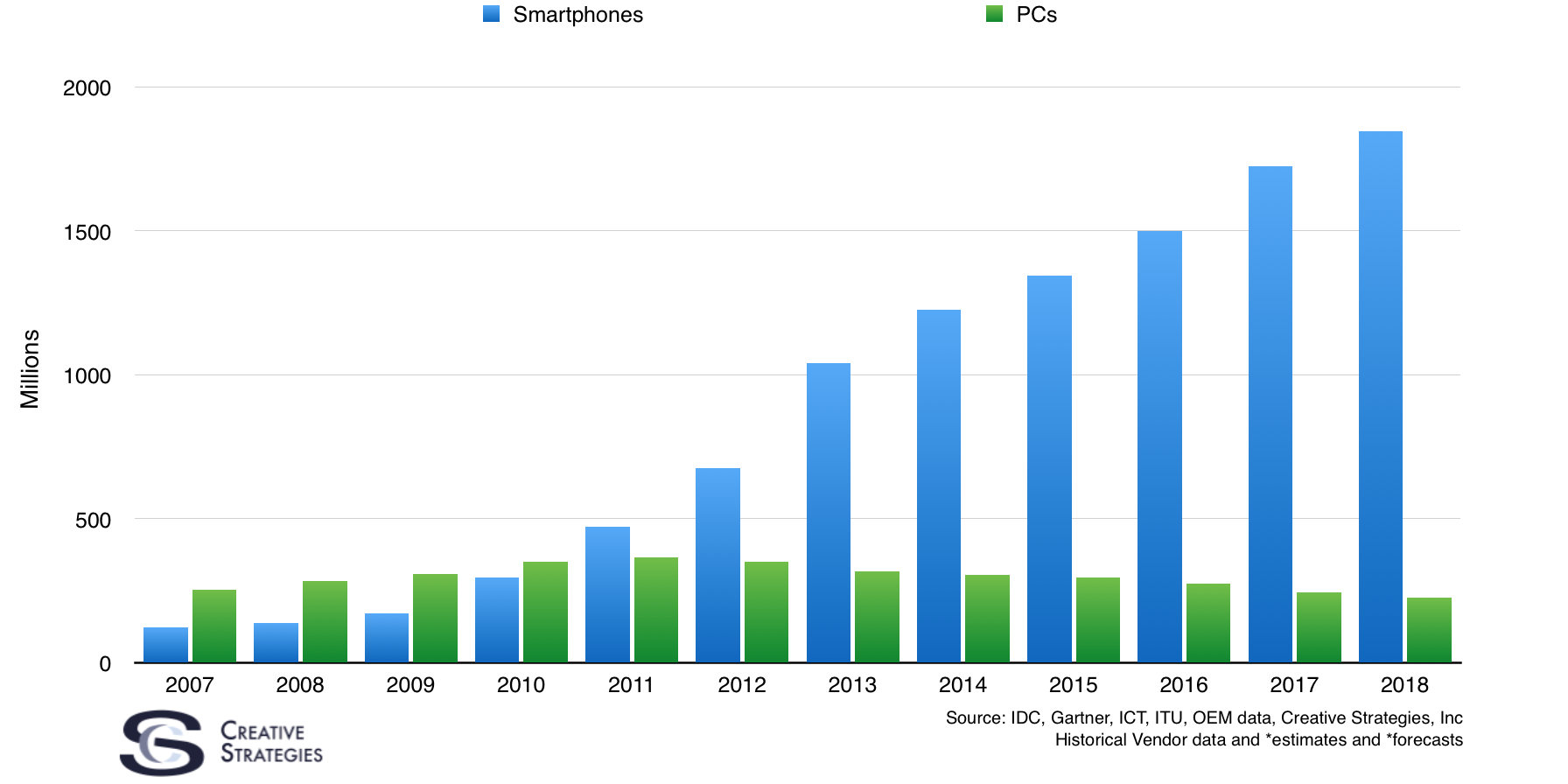

The takeaway from this slide is that one segment is shrinking while one is growing. Those with a PC bias will point out the limitations of a smartphone, highlighting what it can’t do, and use that argument as a basis to state that comparing the growth rates of PCs to smartphones is unfair and irrelevant. In reality, neither is true.

The journey of computing has been from big to small and from complex to simple. Computers started in a giant room and are now in our pockets. Computers which used to require many hours of training to become literate (and many used them and never ever became computer literate) are now operated by toddlers.

So enters the next era of computing. It is computing in the post-modern world. It leads us to computing’s s-curve.

The reason comparing PCs to smartphones is not unfair is that, for hundreds of millions of people, and soon a billion, the pocket computer is their only computer. For them, this is their starting point. But will it be their end point? For many in the PC era, a desktop or notebook PC was the starting point and, to a degree, the smartphone was their end point. As you can see in the chart above, computing is moving forward and is now finally being adopted by the masses in handheld form.

Computing’s history up to this point has been defined and shaped by stationary computers. Tomorrow will be shaped by pervasively connected, context-aware, artificially intelligent computers that fit in our hands and pockets. As crazy as it sounds given today’s modern technology, we will look back in 20 years and this era will look like the Stone Age.

Comparing the sales of smartphones to PCs is not just a relevant comparison, it is THE comparison. Mobile is king. People don’t want to be tethered. Those with a PC bias will make the claim that the smartphone has limitations, which is true. However, the PC also has limitations, and those limitations are the reason the market for PCs is smaller than that of smartphones. The PC represents the capabilities of what you can do with a computer when stationary, and the smartphone represents the capabilities of what you can do when mobile. While both evolve on parallel paths, the size of the market for one is bigger than the other. It is as simple as that. More people value the capabilities of mobile computing than those of stationary computing, regardless of perceived limitations. For many, those limitations are computing enablers.

We don’t know what the future holds. What’s beyond a smartphone? A valid question is not only what a smartphone will be in the future, but what a computer will be in the future.

On June 4th, in San Francisco, Horace Dediu of Asymco and I, along with others, are putting on an executive summit called Post Modern Computing. If a deep dive into the key points in technology history that got us where we are today, and where it may all go tomorrow, interests you, then I encourage you to learn more at Postmoderncomputing.com. Seats are extremely limited.

A spot on, honest position.

There are pressures, agendas perhaps, to re-define the PC. So be it. Perhaps the very notion of “computing” should be re-defined as well.

-Is it anything utilizing a CPU? (I don’t think so).

-Is it email and internet access? (Weakly).

-Is it Physics and Medical simulations? (Definitely)

-Photoshop? (Definitely).

-Is it consuming media? (Then my Blu-Ray player is a computer).

This is why I’ve always made the distinction between “Appliance Computers” and General Purpose computers (which includes the PC). Due to their hardware, software, AND their implementation they are different. A General Purpose Computer is just that. Most importantly, and distinctly, it doesn’t require another computer to program it.

A mobile device cannot do CAD/CAE or even professional videography or cinematography. The consumer does not do these operations on a routine basis. The mobile phone has now become an email communicator, a phone, a voice recorder, a calendar, a GPS, an MP3 player, a point-and-shoot camera, a video camera, a book reader, a social media tool, a health monitor and so on. All these functions rely on simplicity and ease of use.

A majority of the people prefer a point and shoot camera while a professional photographer still likes the traditional SLR or large format camera where s/he can adjust everything (aperture, shutter speed, film processing). PCs will shift into a category like professional cameras while the mobile devices will take over the user specific simple operations. With Apple and Google pressing on e-Wallet technology, carrying credit cards might become history. A PC or a laptop will not be needed.

Who knows, mobile devices might infiltrate the music creation industry and start replacing many hard-wired operations, or get into the diagnostics area and simplify things. The sky is the limit for mobile devices right now. There will always be a use for PCs, and it will become specific to heavy data-crunching operations and productivity tasks that need deep analysis, calculations, simulations, graphics, etc. One never knows: if chip-making technology for processors and memory leapfrogs into new avenues, mobile devices might be able to do them all. In twenty years, PCs will be hobby items like ham radio.

Right! We’ve been driving thumbtacks with sledgehammers. But we don’t call sledgehammers thumbs, or hammers now. Do we?

You are missing the point of your own analogy. It’s not the tool that is compared, it is the job…

For most “general” purposes, a thumbtack is as good as a nail. Both a nail and a thumbtack hold things on the wall or bulletin board. The professional may need nails to build houses. Those with a house need thumbtacks much more often.

As you said, thumbtacks have been driven in by sledgehammers when a thumb was sufficient. But, if you happen to need a nail for a particular job, use a hammer by all means.

Yes, it’s the job. I left that out as understood. Still a thumb is a thumb, a hammer is a hammer, and a sledgehammer is a sledgehammer… I feel that the spin of recent months has been trying to convince me that a thumb is a sledgehammer.

No, the “spin”, which many don’t want to accept, is that everyone has two thumbs and most are quite happy without a sledgehammer and yet still do real work.

Call it as you will. If a smartphone is a PC, what is a PC then?

I guess we are back to cars and trucks, then, aren’t we?

https://disqus.com/home/discussion/techpinions/pc_stands_for_personal_choice#comment-1375094843

In the world as we imagine it, there will always be a place for things that require lots of screen and precise input that can be operated for hours at a time. But these are likely to become more specialized and rarefied as “mainstream” computing moves to simpler devices that are ubiquitous. We’ve been using PCs for everything, now we will use them for some things only as needed.

I can imagine the death of the general purpose computer as even the “heavy duty” tasks define the tools required; rather than getting a “general purpose PC”, those who do loads of Photoshoppy work might have a machine that is oriented towards wonderful resolution, color balance and precise non-linear input. Those who do massive spreadsheet work don’t need all that graphics firepower, they want a big screen and plenty of CPU. Perhaps these don’t need to be the same machine or even run the same OS; they are dedicated tools, optimized for what each does. Maybe.

“Perhaps the very notion of “computing” should be re-defined as well.”

I’d say it’s the ability to load and run apps beyond those originally built into the device. If all it can do is run preloaded apps, then it’s an appliance. But if it can download and run arbitrary apps, it’s a computer.

Which is not to say that all computers are the same. An oil company isn’t going to want to do seismic analysis to find their next drill site on a netbook. Nobody’s going to want to use a mainframe to book an Uber cab. So basically I don’t think there’s any such thing as a “general purpose computer” anymore. The kinds of things we ask computers to do have become too diverse for there to be a single form factor or even a single OS that can justly be classified as “general purpose”.

“Most importantly, and distinctly, it doesn’t require another computer to program it.”

That’s way too restrictive a criterion. Phones aside, the original 1984 Macintosh was clearly a real computer (it was significantly more capable than any IBM PC or Apple II), but in the beginning, you had to write programs for it on an Apple Lisa.

Your points are well taken, but microcontrollers do exactly what you describe. They are able to take new programs. A lathe machine for instance.

The original Mac was perfectly capable of being directly programmed, it just didn’t ship with a compiler. Compilers, however, did exist, and could be freely loaded and used. I don’t know the lag between launch and compiler (or interpreter) availability, but it was implemented in a fashion that could be done.

A set of commands for a lathe is data, not a program. Different parts are made with different data sets, all run thru the same control program.

Of course we can get philosophical about what is a program vs. data. A BASIC program is run thru the interpreter at runtime. By convention we call it a program, but is it data, and is the interpreter the real program?

A discussion for another time and place.

The pocket computer is not only the only computer for some; it’s the best computer for all. Cus’ it’s in your pocket, i.e., you have it with you. The best computer … or camera … that is sitting at home when you need it … is worse than useless. Why? Opportunity cost: The money you spent on a computer that is inaccessible could have paid for one that you have at hand.

Wholeheartedly agree! That’s why I always have a laptop in the car. 😉

Yep. I see Ben is doing a conference on postmodern computing, which is more than just mobile computing.

It’s more than just mobile; it’s personal in a way PCs never were. Coins in your pocket are mobile; your wallet and ID are something else. Your car keys are something else.

It’s a lot more than computing; more importantly it’s communicating and connecting.

Personal communicators are not your grandpa’s train set anymore. Whoo whoo.

Like Jobs said, the post-PC era has begun already.

“The journey of computing has been from big to small and from complex to simple. Computers started in a giant room and are now in our pockets. Computers which used to require many hours of training to become literate (and many used them and never ever became computer literate) are now operated by toddlers.”

Except it’s not a matter of replacement, it’s a matter of addition. There are still computers that fill entire rooms or entire buildings. There are still computers that can only be operated from the command line. They might be less common now than they were before the PC started the whole “miniaturize and simplify” trend, but they’ve never gone away. Likewise, today’s PCs might become less common, but they’re always going to be around.

The “post PC era” is great marketing but does a very poor job of communicating what’s going to happen. The mobile computer isn’t going to destroy or replace the PC (except in limited contexts), it’s going to complement and add to it.

Yes, the mobile computer is going to complement and add to the PC for many, but it will also decrease the number of PC sales per year as many delay PC replacements, and some choose not to buy a PC at all.

Interesting idea, the notion of throwing out “fairness” and looking at computing in the broadest sense.

If you’re starting with giant machines that took up entire rooms, which clearly served a non-personal need, what else would you include and what would that look like?

What if you broadened this to all classes of computing? To include servers, infrastructure devices, and even other “personal” technologies like digital cameras and walkmans, etc.? If you’re starting with the giant machines that took up entire rooms, and you want to throw out “unfairness,” what would that look like?

I imagine that all the computing devices required to support the huge growth of non-PC “personal computing” sitting in all those data centers should clearly contribute to this s-curve as well, for example.

You can start with giant computers in rooms, because that is what computing people “used” in a hands-on way (they stuck punched cards in, and turned dials, etc.). Therefore, it was the equivalent of a “personal” computer today.

Racks of servers in data centers are in no way used personally, though each supports thousands of mobile users by serving webpages or cloud apps. The technician wandering through the racks probably uses an iPad or MacBook to monitor all the Linux servers.

PC sales may or may not already include sales of rack-mounted servers to data centres. If they do, then “PC” sales are still falling. If they don’t, well, again, they aren’t really “personal” computers, are they?

Perhaps data-center servers aren’t even purchased in the same way as “PCs” anyway — perhaps they are kits of boxes and CPUs bought by the pallet load and put together in the data centre, since each data centre likes to be in full control of configuration from the ground up as their proprietary differentiator. But be that as it may, PC sales figures that include racked servers may yet be falling, unless racked units are growing faster than the general decline.

In reference to the second chart, tablet growth is shown to fall behind the equivalent smartphone growth after this year. The reason for this ridiculously conservative estimate is the source: analysts who count Microsoft and traditional PC makers as their customers. While they can’t deny the past and present, they can predict a drop-off for tablet growth in the future.

In truth, as highlighted by Horace at Asymco, tablets are growing about twice as fast as smartphones; just count the first four light green bars (11-14) and compare them to the first four dark green (05-08). To imagine that will slow down after this year is simply wishful thinking from PC OEMs and their analyst mouthpieces.

Keep in mind I’m sharing our forecasts in that chart as well. Also keep in mind our forecasts for tablets are more aggressive than most other firms I see.

But overall I agree, we remain extremely bullish on tablets. Horace and I will also dive into this topic in our summit.

I think the biggest theme of postmodernism is a decentralization/democratization of just about anything one can think of. For instance, in the arts in the US, New York has long been effectively the only place one could make a mark as an artist. That is shifting more and more. It doesn’t mean NYC is _not_ important, just that it isn’t the universal center it once was. Trey McIntyre can make his home in Boise, ID, and still be considered a major player in the dance world. He still has to go to NYC, but he doesn’t have to stay there or even start there. Twenty years ago that was unimaginable.

Similarly, the newspaper or news broadcasts aren’t the only places for news anymore. The local radio station or local club/bar are not the only places to find music, etc. Systems that have existed for centuries are being deconstructed the world over.

The PC is not going away, but it isn’t the central/primary computing device it once was.

I wish I could make it to your summit! Envious of others who can.

Joe


3gs! Just wanted to say I love reading your blog and look forward to all your

posts! Carry on the fantastic work!

wellbutrin 65mg: Buy Wellbutrin XL 300 mg online – wellbutrin 10mg

http://wellbutrin.rest/# wellbutrin 300 mg no prescription online

ventolin 4mg price: Ventolin inhaler – ventolin tablets buy

https://clomid.club/# how can i get cheap clomid without dr prescription

paxlovid buy: Paxlovid over the counter – paxlovid generic

https://paxlovid.club/# buy paxlovid online

medicine in mexico pharmacies: buying from online mexican pharmacy – medicine in mexico pharmacies
