The concept of putting more computing power closer to where applications are running, commonly referred to as “edge computing”, has been talked about for a long time. After all, it makes logical sense to put resources nearer to where they’re actually needed. Plus, as people have come to recognize that not everything can or should be run in hyperscale cloud data centers, there has been increasing interest in diversifying both the type and location of the computing capabilities necessary to run cloud-based applications and services.

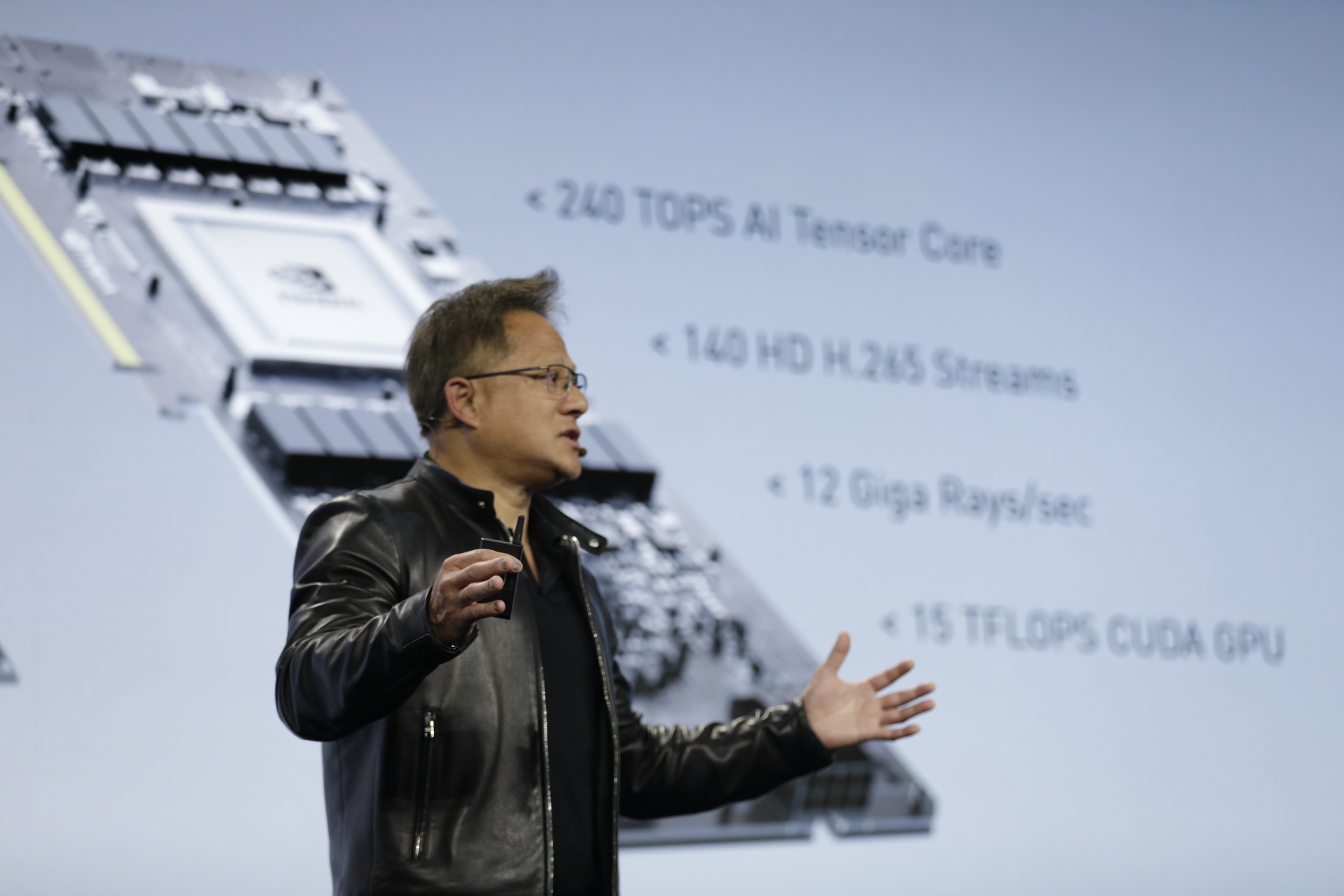

However, the choices for computing engines on the edge have been somewhat limited until now. That’s why Nvidia’s announcement (well, technically, re-announcement after its official debut at Computex earlier this year) of its EGX edge computing hardware and software platform has important implications across several different industries. At a basic level, EGX brings GPUs to the edge, giving IoT, telco, and other industry-specific applications not typically thought of as Nvidia clients the ability to tap into general-purpose GPU computing.

Specifically, the company’s news from the MWC LA show provides ways to run AI applications fed by IoT sensors on the edge, as well as two different capabilities important for 5G networks: software-defined radio access networks (RANs) and virtual network functions that will be at the heart of network slicing features expected in forthcoming 5G standalone networks.

Nvidia’s announced partnership with Microsoft to have the new EGX platform work with Microsoft’s Azure IoT platform is an important extension of the overall AI and IoT strategies for both companies. Nvidia, for example, has been talking about running AI applications inside data centers for several years, but until now it hasn’t been part of most discussions about extending AI inferencing workloads to the edge in applications like retail, manufacturing, and smart cities. Conversely, Microsoft’s Azure IoT work has largely focused on much lower-power (and lower-performance) compute engines, limiting the range of applications for which they can be used. With this partnership, however, each company can leverage the strengths of the other to enable a wider range of distributed computing applications. In addition, it gives software developers a consistent platform from large data centers to the edge, which should ease the ongoing challenge of writing distributed applications that can smartly leverage different computing resources in different locations.

On the 5G side, Nvidia announced a new collaboration with Ericsson, a key 5G infrastructure provider, which opens up a number of interesting possibilities for the future of GPUs inside critical mobile networking components. Specifically, the companies are working out how to leverage GPUs to build completely virtualized and software-defined RANs, which provide the key connectivity capabilities for 5G and other mobile networks. For most of their history, cellular network infrastructure components have been specialized, closed systems typically based on custom ASICs, so the move to support GPUs potentially provides more flexibility, as well as smaller, more efficient equipment.

For the other 5G applications, Nvidia partnered with Red Hat and its OpenShift platform to create a software toolkit they call Aerial. Leveraging the software components of Aerial, GPUs can be used to perform not just radio access network workloads (which should be able to run on the forthcoming Ericsson hardware), but also the virtual network functions behind 5G network slicing. The concept behind network slicing is to deliver individualized features to each person on a 5G network, including capabilities like AI and VR. Network slicing is a noble goal that’s part of the 5G standalone network standard, but it will require serious infrastructure horsepower to realistically deliver. To make the process of creating these specialized functions easier for developers, Nvidia is delivering containerized versions of GPU computing and management resources, all of which can plug into a modern, cloud-native, Kubernetes-driven software environment as part of Red Hat’s OpenShift.

Another key part of enabling these network slicing capabilities is being able to process the data as quickly and efficiently as possible. In the real-time environment of wireless networks, that requires extremely fast connections to data on the network and keeping that data in memory the whole time. That’s where Nvidia’s new Mellanox connection comes in, because another key function of the Aerial SDK is a low-latency connection between Mellanox networking cards and GPU memory. In addition, Aerial incorporates a special signal processing function that’s optimized for the real-time requirements of RAN applications.

What’s also interesting about these announcements is that they highlight how far the range of GPU capabilities has expanded. Well past the early days of faster graphics in PCs, GPUs, such as those included in the EGX offering, now have the software support to be relevant in a surprisingly broad range of industries and applications.
