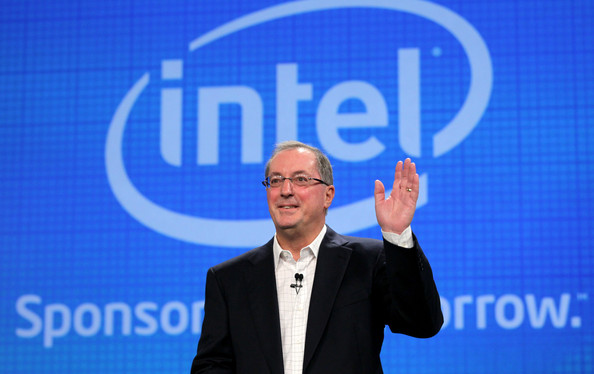

Intel’s CEO Paul Otellini is retiring in May 2013. With his nearly 40-year career at Intel coming to an end, this is a timely opportunity to look at his impact on the company.

Intel As Otellini Took Over

In September 2004, when it was announced that Paul Otellini would take over as CEO, Intel was #46 on the Fortune 100 list and had ramped production to 1 million Pentium 4s a week (today it produces over a million processors a day). The year ended with revenues of $34.2 billion. Otellini, who joined Intel with a new MBA in 1974, had 30 years of experience at the company.

The immediate challenges the company faced fell into four areas: technology, growth, competition, and finance.

Technology: Intel’s processor architecture had relied on packing in more transistors and clocking them faster, which generated more heat. The solution was to use the benefits of Moore’s Law to put more cores on each chip and run them at controllable, and eventually much reduced, voltages.

Growth: The PC market was 80% desktops and 20% notebooks in 2004, with the North American and European markets already mature. Intel had chip-making plants (aka fabs) coming online that were scaled to a continuing 20%-plus volume growth rate. Intel needed new markets.

Competition: AMD was ascendant, and a growing menace. As Otellini was taking over, a market research firm reported AMD had over 52% market share at U.S. retail, and Intel had fallen to #2. Clearly, Intel needed to win with better products.

Finance: Revenue in 2004 recovered to beat 2000, the Internet bubble peak. Margins were in the low 50% range — good but inadequate to fund both robust growth and high returns to shareholders.

Where Intel Evolved Under Paul Otellini

Addressing these challenges, Otellini changed the Intel culture, setting higher expectations and moving in many new directions to take the company and the industry forward. Let’s look at the major changes at Intel over the past eight years in the same four areas: technology, growth, competition, and finance.

Technology

Design for Manufacturing: Intel’s process technology in 2004 was at 90nm. To reliably achieve a new process node and architecture every two years, Intel introduced the Tick-Tock model, where odd years deliver a new architecture and even years deliver a new, smaller process node. The engineering and manufacturing fab teams work together to design microprocessors that can be manufactured in high volume with few defects. Other key accomplishments include High-K Metal Gate transistors at 45nm, 32nm products, 3D tri-gate transistors at 22nm, and a 50% reduction in wafer production time.

Multi-core technology: The multi-core Intel PC was born in 2006 in the Core 2 Duo. Now, Intel uses Intel Architecture (IA) as a technology lever for computing across small and tiny (Atom), average (Core and Xeon), and massive (Phi) workloads. There is a deliberate continuum across computing needs, all supported by a common IA and an industry of IA-compatible software tools and applications.

Performance per Watt: Otellini led Intel’s transformational technology initiative to deliver 10X more power-efficient processors. Lower processor power requirements allow innovative form factors in tablets and notebooks and are a home run in the data center. The power-efficiency initiative comes to maturity with the launch of the fourth generation of Core processors, codename Haswell, later this quarter. Power efficiency is critical to growth in mobile, discussed below.

Growth

When Otellini took over, the company focused on the chips it made, leaving the rest of the PC business to its ecosystem partners. Recent unit growth in these mature markets comes from a greater focus on a broader range of customers’ computing needs, and from bringing leading technology to market rapidly and consistently. In so doing, the company gained market share in all the PC and data center product categories.

The company shifted marketing emphasis from the mature North American and European markets to emerging geographies, notably the BRIC countries: Brazil, Russia, India, and China. That shift accounted for a significant fraction of revenue growth over the past five years.

Intel’s future growth requires developing new opportunities for microprocessors:

Mobile: The early Atom processors introduced in late 2008 were designed for low-cost netbooks and nettops, not phones and tablets. Mobile was a market where the company had to reorganize, dig in, and catch up. The energy efficiency that benefits Haswell, the communications silicon from the 2010 Infineon acquisition, and the forthcoming 14nm process in 2014 will finally allow the Atom brand to stand toe-to-toe with competitors Qualcomm, nVidia, and Samsung. Mobile is a huge growth opportunity.

Software: The company acquired Wind River Systems, a specialist in real-time software, in 2009 and McAfee in 2010. These added to Intel’s own developer tools business. The software services business accelerates customers’ time to market with new, Intel-based products. The company also stepped up efforts in consumer device software, optimizing the operating systems from Google (Android), Microsoft (Windows), and Samsung (Tizen). Why? Consumer devices sell best when an integrated hardware/software/ecosystem like that of Apple’s iPhone exists.

Intelligent Systems: Specialized Atom systems on a chip (SoCs) with Wind River software and Infineon mobile communications radios are increasingly being designed into medical devices, factory machines, automobiles, and new product categories such as digital signage. While the global “embedded systems” market lacks the pizzazz of mobile, it is well north of $20 billion in size.

Competition

AMD today is a considerably reduced competitive threat, and Intel has regained #1 market share in PCs, notebooks, and the data center.

Growth into the mobile markets brings a new set of competitors, all of which use the ARM chip architecture. Intel’s first hero products for mobile arrive later this year, and the battle will be on.

Financial

Intel has delivered solid, improved financial results to stakeholders under Otellini. With ever more efficient fabs, the company has improved gross margins. Free cash flow supports a dividend above 4%, a $5B stock buyback program, and a multi-year capital expense program targeted at building industry-leading fabs.

The changes in financial results are summarized in the table below, which compares 2004, the year before Otellini took over as CEO, with 2012.

| GAAP | 2004 | 2012 | Change |
| --- | --- | --- | --- |
| Revenue | $34.2B | $53.3B | 55.8% |
| Operating Income | $10.1B | $14.6B | 44.6% |
| Net Income | $7.5B | $11B | 46.7% |
| EPS | $1.16 | $2.13 | 83.6% |
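For readers who want to check the Change column, here is a minimal Python sketch of the arithmetic. It assumes the column is a simple percentage change from 2004 to 2012, rounded to one decimal place. The names `results`, `percent_change`, and `changes` are illustrative only, and the figures are copied from the table.

```python
# Figures copied from the table (billions of USD, except EPS in dollars).
# Each entry is (2004 value, 2012 value).
results = {
    "Revenue": (34.2, 53.3),
    "Operating Income": (10.1, 14.6),
    "Net Income": (7.5, 11.0),
    "EPS": (1.16, 2.13),
}


def percent_change(start, end):
    """Return the change from start to end as a percentage of start."""
    return (end - start) / start * 100


# Assumption: the table rounds each change to one decimal place.
changes = {
    name: round(percent_change(start, end), 1)
    for name, (start, end) in results.items()
}

for name, pct in changes.items():
    print(f"{name}: {pct}%")
```

Under that assumption, the computed values match the table. EPS grew faster than net income, which is consistent with the share count shrinking through the stock buybacks mentioned above.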

The Paul Otellini Legacy

There will be books written about Paul Otellini and his eight years at the helm of Intel. A leader should be measured by the institution he or she leaves behind. I conclude those books will describe Intel in 2013 as excelling in managed innovation, systematic growth, and shrewd risk-taking:

Managed Innovation: Intel and other tech companies are always innovative. But Intel manages innovation as well as anyone, on a repeatable schedule and with very high quality. That’s uncommon and exceedingly difficult to do with consistency. For example, the Tick-Tock model is a business school case study: it churns out ground-breaking transistor technology, processors, and high-quality leading-edge manufacturing at a predictable, steady pace from engineering to volume manufacturing. This repeatable process is Intel’s crown jewel, and is a national asset.

Systematic Growth: Under Otellini, Intel made multi-billion dollar investments in each of the mobile, software, and intelligent systems markets. Most of the payback growth will come in the future, and will be worth tens of billions in ROI.

The company looks at the Total Addressable Market (TAM) for digital processors, decides what segments are most profitable now and in the near future, and develops capacity and go-to-market plans to capture top-three market share. TAM models are very common in the tech industry. But Intel is the only company constantly looking at the entire global TAM for processors and related silicon. With an IA computing continuum of products in place, plans to achieve more growth in all segments are realistic.

Shrewd Risk-Taking: The company is investing $35 billion in capital expenses for new chip-making plants and equipment, creating manufacturing flexibility, foundry opportunities, and demonstrating a commitment to keep at the forefront of chip-making technology. By winning the battle for cheaper and faster transistors, Intel ensures itself a large share of a growing pie while keeping competitors playing catch-up.

History, not analysts, will grade the legacy of Paul Otellini as CEO at Intel. I am comfortable predicting he will be well regarded.