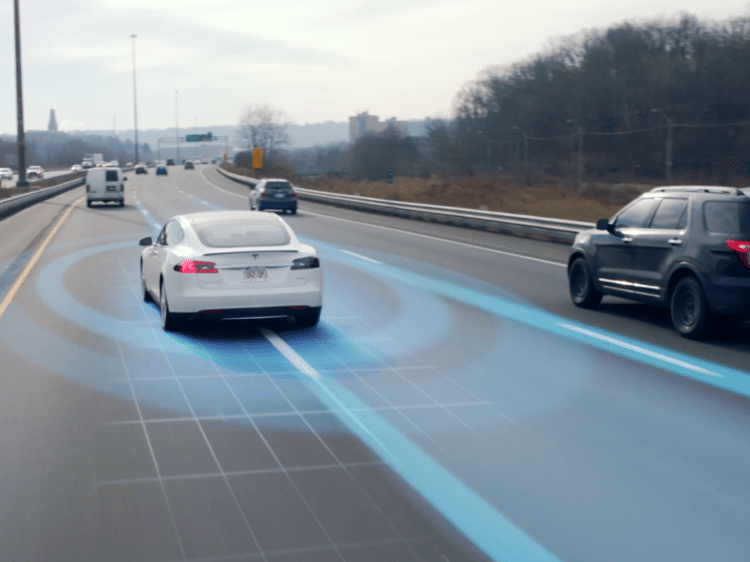

No stranger to creating evocative statements that generate headlines, Tesla founder and CEO Elon Musk said during the company’s quarterly earnings call that the future of its autonomous driving systems for Tesla vehicles would utilize in-house designed computing systems. This is not the first time Elon has said the company was working on chips for AI processing, but it does mark the first time more specific statements on capability have been made.

But maybe the most important question is one that went unasked by the financial analysts on the call: is this even something Tesla SHOULD be pursuing?

Let’s be very clear up front: building a processor is hard. Building one that can compete with the giants of the tech market like NVIDIA, Intel, AMD, and Qualcomm is even more difficult.

A trend of custom silicon

There has been a trend in the implementer space (companies that traditionally take computing solutions from vendors and implement them into their products) to create custom silicon. Apple is by far the most successful company to do this in recent history. It moved from buying all of the parts that make up the iPhone and iPad to designing almost every piece of computing silicon including the primary Arm processor, the graphics, and even the storage controller. (Interestingly the modem is still the one thing that eludes them, depending on Qualcomm and Intel for that.)

The other modern examples of this silicon transition are Google and Facebook. Google built the TPU for artificial intelligence processing and Facebook has publicly stated that it has ongoing research for a similar project. Both of those companies are enormous, with a substantial purse to back up the engineering feat that is creating a new product like this. Their financial future is not in doubt or dependent on the outcome of the AI chip process.

Tesla thrived on tech

Tesla is a company that was born and lives off of the idea that it is more advanced than everyone else out there. I should know – I bought a Model S in 2015 with that exact mindset. Musk was brash and bucked the traditional automotive trends. He made promises like coast-to-coast autonomous drives by the end of 2017, and AutoPilot seemed like magic when it was released.

Since then, we have passed the halfway point of 2018 without that autonomous drive taking place, and AutoPilot has been surpassed by other driving assistance solutions from GM and others.

This might lead many to believe that Tesla NEEDS to develop its own AI hardware for autonomous driving in order to get back on track, no longer wanting to be beholden to the companies that have provided previous generations of smart driving technology for its cars.

Mobileye was the first partner that Tesla brought on board, but the companies split because (as was widely rumored) Mobileye wasn’t comfortable with the expanding claims Tesla was making about its imaging and processing systems. NVIDIA hardware powered the infotainment and driving systems for some period of time and more recently Intel-based systems have found their way into the infotainment portion.

Clearly Tesla has experience working with the major players in the AI and automotive spaces.

Performance claims

On the call with analysts, Musk mentioned that these new processors Tesla was working on would have “10x” the performance of other chips. Obviously, no specifics were given but it seems reasonable he was talking about the NVIDIA platform in use on shipping cars today that is more than three years old, the Drive PX2. And even then, only half of the PX2 processing power was integrated on the vehicles.

Musk also brought up that the interconnect between the CPU and GPUs on current AI hardware systems was a “constraint” and a bottleneck on computational throughput.

These reasons for building custom hardware are mostly invalid as they are addressed by current and upcoming hardware from others including NVIDIA. The Drive Xavier system offers 10x the performance of PX2 and NVIDIA’s upcoming Drive Pegasus will be even faster. And these platforms integrate NVLink technology to provide a massive upgrade to the bandwidth between the CPU and GPU, addressing the second concern Musk voiced on the earnings call.

The Risks

Deciding to design and build your own AI silicon has a lot of risks that come along for the ride. First and likely most important is the issue of safety. If Google’s TPU AI system doesn’t work correctly then we get a mismatched facial recognition result for an uploaded image. If a self-driving car system malfunctions then we have a more dangerous situation. After the Uber autonomous driving accident that killed a pedestrian early this year, the safety and reliability of self-driving vehicles are more prominent and top-of-mind than ever before. There are years of regulation and debate coming over who shares or holds liability for these types of occurrences but you can be damn sure that the car manufacturer is already on the top of that list.

If Tesla happens to build the car, design the AI hardware, write the AI system level software, and sell it direct to the consumer, there are very few questions as to where the fingers will point.

Financial risks exist for building in-house silicon too. Tesla is a small company relative to Google and Facebook, and even smaller if we focus on the teams involved in software and hardware development outside of the vehicle-manufacturing systems. The cost to build custom chips is usually amortized over years and millions of units shipped, justifying the added price compared to using off-the-shelf components. Tesla has sold just north of 350,000 cars in the last 6+ years and even if we double that in the next six, we have only 700,000 chips that will be needed for these autonomous vehicles.
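To make the amortization argument concrete, here is a rough back-of-the-envelope sketch. The 700,000-unit figure comes from the estimate above; the design cost and the high-volume comparison figure are purely hypothetical placeholders chosen for illustration, not numbers reported by Tesla or any chip vendor.

```python
def amortized_cost_per_chip(design_cost_usd: float, units: int) -> float:
    """Spread a one-time design cost evenly across every chip shipped."""
    if units <= 0:
        raise ValueError("units must be positive")
    return design_cost_usd / units


# ASSUMPTION: a hypothetical one-time design cost, for illustration only.
ASSUMED_DESIGN_COST_USD = 100_000_000

# From the article: roughly 700,000 chips over the next six years.
TESLA_UNITS = 700_000

# ASSUMPTION: a hypothetical volume for a vendor selling into many markets.
ASSUMED_HIGH_VOLUME_UNITS = 10_000_000

tesla_overhead = amortized_cost_per_chip(ASSUMED_DESIGN_COST_USD, TESLA_UNITS)
vendor_overhead = amortized_cost_per_chip(
    ASSUMED_DESIGN_COST_USD, ASSUMED_HIGH_VOLUME_UNITS
)

print(f"Design cost per chip at Tesla volume:  ${tesla_overhead:,.2f}")
print(f"Design cost per chip at vendor volume: ${vendor_overhead:,.2f}")
```

Whatever the real design cost turns out to be, the per-chip overhead scales inversely with volume, which is why a low-volume buyer carries a much heavier burden per unit than a vendor shipping across many markets.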

Companies like NVIDIA that have leadership positions in the AI landscape build processors and platforms for hundreds of different usage models, from AI training to inference, and from smart cameras to autonomous cars. They have the engineering talent in place and the experience to build the best chips that combine performance, efficiency, and pricing viability.

Intel and AMD will also likely make more noise in these spaces soon. Intel just finished a data center announcement that included specific deep learning and AI accelerated instructions for its updated processor family coming later this year.

Would a chip that is custom built and tuned for Tesla-specific AI models and sensor systems be more power and performance efficient than a more general solution from NVIDIA or Intel? Yes. But does that make it worth the time, money, and risk to get it done when there are so many other problems that Tesla could be addressing? I just don’t see it.
