Benchmarks are used in every market as one way to show why one company’s widget is better than another company’s widget. Whether it’s MPG on a car, energy ratings on appliances, or a Wine Spectator rating, it’s a benchmark. The high-tech industry loves benchmarks, too, and there is an industry full of companies and organizations that do nothing but develop and distribute benchmarks. With high-tech benchmarks comes controversy, because the stakes are high: all things being equal, the widget with the highest benchmark scores will typically receive more money. The recent spat about Intel versus ARM in smartphones illustrates what is wrong with the “system”. By “system”, I mean the full chain from the benchmarks themselves to the reporting of them and on to the buyer. I want to explore this and offer some suggestions to help fix what is broken.

The first thing I want to highlight is that getting benchmarks “right” is important to the buyer. If a buyer makes a choice based on a benchmark and the benchmark either isn’t representative of the comparative user experience or has been manipulated, the buyer has been misled. The first case would be like buying a racing boat based on the number of cup holders, and the second would be like running an auto MPG test in 100 mph tailwinds. The smartphone benchmark blow-up has accusations of both and reminds me a bit of a Big Brother episode.

It all started with ABI Research publishing a note in June entitled, “Intel Apps Processor Outperforms NVIDIA, Qualcomm, Samsung”. News outlets like the Register picked up on this and wrote an article entitled, “Surprise! Intel smartphone trounces ARM in power trials.” Seeking Alpha jumped on the bandwagon, citing the ABI report in an article entitled, “Intel Breaks ARM, Sends Shares Down 20%”. Hundreds of articles later, if you believed the press, Qualcomm, Samsung, and Nvidia would be driven out of mobility and Intel would pick up all their business. Even Intel doesn’t believe that, even though it has made some pretty remarkable mobile improvements with Atom from where it was three years ago.

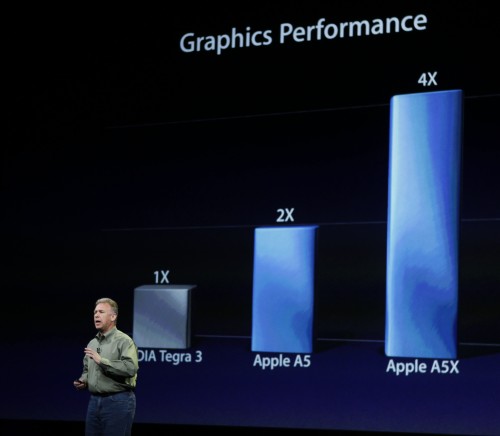

As I said before, this isn’t the first benchmark controversy, and it won’t be the last. BAPCo SYSmark and MobileMark were some of the more controversial in the PC world over the last decade, and even 3D benchmarks have had their controversies. Apple isn’t immune either. Servers and systems are chock-full of examples, too. So why would mobile smartphone benchmarks be any different?

When I talked with Intel, they said that no one should ever use a single benchmark to measure performance and that anyone with questions about how AnTuTu worked on their processors or compiler should contact AnTuTu. I’ve had no luck with that yet. Smartphone benchmarks are an issue, so what should the industry do?

I have had the pleasure of being part of multiple industry benchmarking efforts over the last 20 years, have seen some success, some failures, and learned a lot. I think that if consumers, press, analysts, and investors stuck to a few guidelines, we wouldn’t get sideways like this.

The following are my top benchmark learnings for client devices like phones, tablets and PCs:

- The best benchmarks reflect real world usage models: It all starts with what the user wants to do with their device. For smartphones, a benchmark should reflect what people actually do with their phones: social media, email, messaging, web, games, music, photos, video, and talking. As an example, do smartphone benchmarks account for photo lag time or battery life?

- Never rely on one benchmark: There are no perfect benchmarks and every one of them has a flaw somewhere. In the AnTuTu case, many in the press and analyst community relied on one benchmark, which exacerbated the issue at hand.

- Benchmark shipping devices: Many benchmarks are performed on test systems, not real devices. Typically, there is benchmark variability between phones in-market and test systems, and it goes both ways. Some early ODM phones or reference designs have beta-stage firmware and drivers, which can be slower or faster than production-level designs by OEMs. Many shipping phones have bloatware, which can slow down a benchmark. Intel was very clear with me to ignore the latest leaked Bay Trail benchmarks that were run on reference designs.

- Application-based benchmarks are the most reliable: There are three types of benchmarks: synthetic, application-based, and hybrid. Synthetic benchmarks, like the AnTuTu memory test, run an algorithm that tests one specific subsystem, not how a smartphone would run an app in the real world. The benefit of synthetics is that they are easier to run and develop. I prefer application-based benchmarks like those the 3D benchmarkers use, where they test a specific game like Crysis, but I am OK with hybrids as long as they reflect the performance of real-world applications. The downside is that application-based benchmarks take a lot of development time and resources.

- Look for transparency: Some benchmark companies and groups tell you exactly what they test, how they test, and even offer source-code inspection. If a benchmark doesn’t offer that, be very wary, and ask yourself, “why don’t they?”

- Look for consistency: The best benchmarks are repeatable and can be relied upon time and time again. Be wary of benchmarks that give you different results after running them multiple times. You just can’t rely on benchmarks like that.
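Two of these guidelines, consistency and not relying on a single benchmark, are easy to check with a few lines of code. What follows is only a minimal sketch under stated assumptions: the function names, the 3% variation threshold, and every score are hypothetical and made up for illustration, and none of it comes from AnTuTu, ABI Research, or any real device.

```python
import statistics
from math import prod


def coefficient_of_variation(scores):
    """Relative spread of repeated runs: sample standard deviation / mean."""
    return statistics.stdev(scores) / statistics.mean(scores)


def is_repeatable(scores, max_cv=0.03):
    """True if repeated runs vary by no more than max_cv (an assumed 3% cutoff)."""
    return coefficient_of_variation(scores) <= max_cv


def relative_performance(device, baseline):
    """Geometric mean of per-benchmark score ratios (device / baseline).

    Assumes every score is "higher is better" and only compares the
    benchmarks that both devices were actually run on.
    """
    shared = sorted(set(device) & set(baseline))
    if not shared:
        raise ValueError("no benchmarks in common")
    ratios = [device[name] / baseline[name] for name in shared]
    return prod(ratios) ** (1 / len(ratios))


# Hypothetical repeated runs of the same benchmark on the same phone.
steady_runs = [1000, 1010, 990, 1005, 995]
noisy_runs = [1000, 1300, 800, 1150, 900]
print(is_repeatable(steady_runs), is_repeatable(noisy_runs))

# Hypothetical scores: phone A looks twice as fast on one synthetic test,
# but is slightly slower on two application-style tests.
phone_a = {"memory_synthetic": 2000, "web_browsing": 90, "game_fps": 27}
phone_b = {"memory_synthetic": 1000, "web_browsing": 100, "game_fps": 30}
print(round(relative_performance(phone_a, phone_b), 2))
```

In this made-up example, the synthetic test alone suggests a 2x lead, while the geometric mean across all three tests puts the gap far lower. The geometric mean is used here because it treats ratios symmetrically; it is one reasonable way to combine scores, not the only one.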

While no benchmark is perfect and all have issues, I really like Futuremark’s benchmark approach, its execution, and its ability to herd many of the largest tech companies toward decisions.

Mobile benchmarks are some of the more challenging benchmarks to develop, for many reasons. First off, mobile benchmarks must support multiple mobile operating systems, primarily iOS and Android. Secondly, there are three processor instruction sets to support: ARM, MIPS, and x86. Thirdly, smartphones are based on somewhat custom subsystems like image signal processors, digital signal processors, and video encoders, which are often hard to support. Finally, it is very difficult to measure the power draw of specific components of an SoC, or even of a complete SoC, because it entails connecting tiny measurement probes to specific parts of the phone.

In the end, I’m glad the AnTuTu-Intel-ARM blow-up happened, because it gave the industry a chance to reflect on how well smartphones are being evaluated with benchmarks, the pitfalls of those benchmarks, and the impact of the industry quickly jumping to conclusions from one benchmark. Now it’s time for the smartphone industry to come together and plug many of the holes out there.

Yellow hitec journalism will be with us forever because of the lazy louts who desire clicks instead of doing their homework. You obviously do do your homework. Good show. The other writers on this site do too.

Thanks robbiep, we do try hard at Tech.pinions to inform with an attitude.

I’d like to see a whole new set of “benchmarks” for smartphone SALES, sort of as a corollary to your suggestions about reforming smartphones “speed” benchmarks.

Today’s sales analyses use an overly broad “Smartphones” category that lumps together phones which shouldn’t be lumped together, based on capability. Consequently the data tells us nothing useful.

I suggest the sales metric to use is Average Selling Price.

The analyses could use these categories: Smartphones ASP $200-300, Smartphones ASP $300-400, Smartphones ASP $500-600 and finally Smartphones ASP $600 and above.

That would actually be meaningful and useful data about smartphones.

And now Samsung enters the ring,

http://venturebeat.com/2013/07/30/samsung-caught-being-creative-on-galaxy-s4-benchmarks/

Joe
