I believe most analysts, including those who monitor Apple’s every move, are seriously underestimating the ramifications of Apple baking Shazam’s music identification service into iOS 8. This is not merely about increasing song downloads. Rather, this move marks Apple’s determined leap to re-position the iPhone in our lives. The digital hub metaphor is now much too limiting. As the physical and digital worlds mix, merge and mash together to create entirely new forms of interaction and new modes of awareness, the iPhone will become our nerve center. It will guide us, direct us, watch, listen and even feel on our behalf.

A bold statement, I know, especially given the prosaic nature of the rumor. Let’s start then with the original Bloomberg report:

(Apple) is planning to unveil a song discovery feature in an update of its iOS mobile software that will let users identify a song and its artist using an iPhone or iPad.

Apple is working with Shazam Entertainment Ltd., whose technology can quickly spot what’s playing by collecting sound from a phone’s microphone and matching it against a song database.

Song discovery? Ho hum. Only, look beyond the immediate and there’s potential for so much more. It is no coincidence that late last year Shazam updated its iPhone app to support an always-on, always-listening ‘Auto Shazam’ feature. Our phones are becoming increasingly aware of their surroundings. I expect Apple to leverage this technological confluence for our mutual benefit.

Today, Song Discovery.

Apple’s move no doubt satisfies a near-term need. While Shazam has been on the iPhone since 2008, and the company claims 90 million monthly users across all platforms, having its service baked into the iPhone will almost certainly spur increased sales. Song downloads have slowed, not just at iTunes, the world’s largest seller of music, but across the industry.

Instead of having to download the Shazam app, iPhone users will now simply point their device near a sound source and summon Siri: “what song is playing?” So notified, they can then buy it instantly from iTunes.

Little surprise that music industry site MusicWeek was generally positive about the news. Little surprise, also, that the tech industry could not muster much excitement. The Verge essentially summarized Bloomberg’s report.

Daring Fireball’s John Gruber offered little more than “sounds like a great feature.”

Windows Phone Central readers offered only gentle mocking, reminding all who would listen that this feature is already embedded in Windows Phone.

That’s about it. Scarcely even a mention that Shazam has a similar, if less developed, TV show identification feature, which could also prove a boon for iTunes video sales.

Place me at the other end of the spectrum. I think the rumored Shazam integration is a big deal and not because I care about the vagaries of the music business. This is not about yet another mental task the iPhone makes easier. Rather, this move reveals Apple’s intent to enable our iPhones to sense — to hear, see and inform, even as our eyes, ears and awareness are overwhelmed or focused elsewhere.

Tomorrow, Super Awareness.

Our smartphones are always on, always connected to the web, always connected to a specific location (via GPS) and, with minimal hardware tweaks, can always be listening, via the mic, and even always be watching, via the cameras.

What sights, sounds, people, toxins, movements, advertisements, songs, strange or helpful faces, and countless other opportunities and interactions, some heretofore impossible to assess or even act upon, are we exposed to every moment of every day? We cannot possibly know this, but our smartphones can, or soon will. I believe this Shazam integration points the way.

It’s not just about hearing a song and wanting to know the artist. It’s about picking up every sound, including those beyond human earshot, and informing us if any of them matter. Now apply this same principle to every image and face we see but do not consciously process.

Our smartphone’s mic, cameras, GPS and various sensors can record the near-infinite amount of real and virtual data we receive every moment of every day. Next, couple that with the fact our smartphone’s ‘desktop-class’ processing will be able to toss out the overwhelming amounts of cruft we are exposed to, determine what’s actually important, and notify us in real-time of that which should demand our attention. That is huge.

Going forward, the iPhone becomes not simply more important than our PC, for example, but vital for the successful optimization of our daily life. This is not evolution, but revolution.

The Age Of iPhone Awareness

Yes, it’s fun to have Siri magically tell us the name of a song. Only, this singular action portends so much more. At the risk of annoying Android and Windows Phone users, Apple’s move sanctions and accelerates the birth of an entirely new class of services and applications which I call ambient apps.

Ambient apps hear, see and record all the ‘noise’ surrounding us, instantly combine this with our location, time, history, preferences — then run this data against global data stores — to inform us of what is relevant. What is that bird flying overhead? Where is that bus headed? What is making that noise? Who is the person approaching me from behind? Is there anything here I might like?

Your smartphone’s mic, GPS, camera, sensors and connectivity to the web need never sleep. Set them to pick up, record, analyze, isolate and act upon every sound you hear, every sight you see.

This has long been the dream of some, though until now it was impossible due to limited battery life, limited connectivity, and meager on-board processing and data access. No longer.

Let’s start with a simple example.

Why ask Siri “what song is this”? Why not simply say, for example, “Siri, listen for every song I hear (whether at the grocery store, in the car, at Starbucks, etc.). At the end of the day, provide an iTunes link to every song. I’ll decide which ones I want to purchase. Thank you, Siri.”

Utterly doable right now. Except, why limit this service to music?

For example, perhaps your smartphone can detect and take action based upon the fact that, unbeknownst to you, the sound of steps behind you is getting closer. It can sense, record and act upon the fact you walk faster each time you hear a particular song. Or that you slowed down when passing a particular restaurant. What do you want it to do based upon its “awareness” of your own actions, actions of which you were not consciously aware?

Our smartphone can hear and see. It is always with us. It makes sense then to allow it to optimize and prioritize our responses to the real and virtual people and things we interact with every day, even those outside our conscious involvement.

Ambient Apps Are The New Magic

The utility of our smartphone’s responses will only get better. Smartphones sense by having ears (mic), eyes (cameras), by knowing our exact location (GPS) and by being connected to the internet. These continue to improve. It is smartphone sensors, however, that parallel our many nerve endings, feeling and collecting all manner of data and notifying us when an appropriate action should be taken.

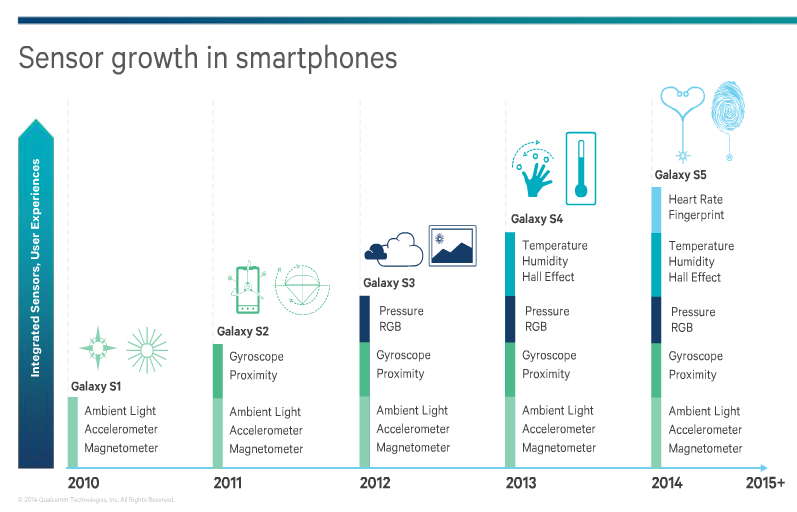

Though still a relatively young technology, smartphones have added a wealth of new sensors with each iteration. The inclusion of these sensors should radically supplement the recording, tracking and ambient ‘awareness’ of our smartphones, and thus further optimize our interactions, both online and offline.

Jan Dawson posted this Qualcomm chart which illustrates the amazing breadth of sensors added to the Samsung Galaxy line over just the past five years. What becomes standard five years from now?

Hear, see, sense. The smartphone’s combination of hardware, sensors, cloud connectivity, location awareness and Shazam-like algorithms will increasingly be used to uncover the most meaningful bits of our lives then help us act upon them, as needed. This is not serendipity, this is design. I think Apple is pointing the way.

I really enjoyed your article. It got me thinking in many different directions, from the more mundane “digital politics” to the more important everyday impact sensor integration brings.

-It’s not new, not even close. This is what sensors have been doing for decades. The cloud integration, location awareness, and other stuff are being done by many, especially Google. You did note this.

-It better have an off switch. The privacy issues are HUGE. How would you like it if your webcam came on when IT (not you) decided to turn on?

Google (or anyone else) may be good, or Google may be evil, but they give you no-cost services in exchange for your information. When you buy a phone (or other computer), you pay money and you own it. Which means you had better control how it works and what it’s doing. (For the “design submissives” out there: yes, within the capabilities and functional expectations of the device.)

Remember the controversy over cell tower tracking data inadvertently stored on the iPhone? That was the very tip of the iceberg. What I’m trying to say is, sensors have the capability to make things even more powerful. This means more complex, with much more user responsibility to have it work to the owner’s favor. Sure, we can put a pretty face on it, make it easy (or even without user intervention), but it does not absolve the user of responsibility. OEMs, of course, will try to conceal that. They want to own your data.

Great comment. Thanks. I must say, I still trust Apple with my data (including my theoretical data) more than nearly any other tech company. My article is speculative so I did not touch on this, but I think Apple is best positioned to *empower* us with all the data we are generating, rather than simply use it to sell us product.

Spot on Brian. Apple is perhaps the only company that can deliver a trustworthy experience, because the ecosystem is curated and controlled, and because Apple has a profit margin. Apple does not need to sell my information to make money. They have a strong financial incentive to protect my information.

I trusted them for a period of time (2008-2010), never again. I don’t and shouldn’t trust anyone selling me anything really. I reserve trust for a more substantive relationship.

Definitely good to be cautious. The point the others were making was that trustworthiness is relative in this context; and, in the absence of something more substantive, selling for a healthy margin makes a company “more trustworthy” than the company giving you a service for free — the incentives to protect your data are different. Apple has an incentive to protect your data, Google has an incentive to sell your data or misuse it. That’s the simple fact.

That’s part of what caused the Google/Apple split over maps, IIRC. Apple wanted turn by turn. In exchange, Google wanted the user information. Apple wouldn’t budge. That’s not all of it, but a major point of it, AFAIK.

Joe

Google badly overplayed their hand in that one.

Exactly. You can’t really trust any company all the time (Apple included), but you can count on profit motive. Apple will do dumb things from time to time, and they’ll piss me off from time to time, but on the whole their strong financial incentive to serve my needs keeps me very satisfied. Is there any other company in tech with that kind of direct customer relationship and financial incentive? Certainly not Google. And not really Microsoft either, they aren’t fully focused the way Apple is. Amazon maybe (but the profit motive is weak)? Not Samsung either, the customer relationship is naturally fractured.

I don’t necessarily agree that you shouldn’t trust, but certainly mis-placed trust is dangerous. Otherwise, each transaction is an irrational leap of faith. I like the motto “Trust, but verify”. Unfortunately, I more often than I would like to admit drop the ball on that second half, and I always regret it when I do. Without fail.

Joe

“This means more complex, with much more user responsibility to have it work to the owner’s favor. Sure, we can put a pretty face on it, make it easy (or even without user intervention), but it does not absolve the user of responsibility”

Fortunately or unfortunately, this would pretty much mean the death of what Brian suggests. Complexity is not the forward trend, either from tech companies or from consumer expectations, from what I can tell.

Or from a user unfriendly perspective, complexity is Facebook’s shield, for instance. The more complex they can make the privacy settings the less inclined people are to use them.

Otherwise, I keep trying to figure out how to undermine the data-mining of personal information. Maybe start a website where we can publish/offer our information for free to Google’s or Facebook’s real customers. Otherwise, I’m with Brian with Apple being the more trustworthy of the current crop.

Joe

“Fortunately or unfortunately, this would pretty much mean the death of what Brian suggests. Complexity is not the forward trend, either from tech companies or from consumer expectations, from what I can tell.”

True. Not everyone is qualified to fly the airplane. Those that are, get maximum advantage, and responsibility for it.

I, for one, welcome our Skynet overlord.

As you should!

What I am envisioning for the iPhone is absolutely empowering. Of course, there will be many who will want to profit from all this personal data. Sadly, there are some scary neighborhoods in our new world.

Nah, I’m more of a John Connor proponent… 🙂

Forget those who seek to profit. The NSA, et al. will love this always connected GPS, camera, and mic too.

Brian, this is your coinage? Ambient apps? Nicely played.

“A class of apps, christened “ambient apps” by the blogosphere, are endowing mild-mannered smartphone users with what might have passed for superpowers a few years ago. They work by keeping your smartphone’s mic, GPS and even its camera listening, watching and seeking out every signal coming from your surroundings.” Search The amazing, inspiring and scary world of ambient apps

Cf. Ambient music is said to evoke an “atmospheric”, “visual” or “unobtrusive” quality. To quote one pioneer, Brian Eno, “Ambient Music must be able to accommodate many levels of listening attention without enforcing one in particular; it must be as ignorable as it is interesting.”

I actually have those original Brian Eno *albums* so +1 for me 😉

(Ambient 1: Music for Airports is still worth a listen.)

M7 and Primesense…. Apple is building a low-powered, always on sensory system for the phone.

I didn’t think about Primesense when writing this, though my limited understanding of what it can do absolutely fits into this notion, and your labeling this a “sensory system” is a great phrase.

I did cut out some bits, including thoughts on M7 as I think that currently biases people to think of fitness band tracking. But, I do think it belongs in this overall discussion of how iPhone is becoming our personal nerve center.

The biggest questions are how low powered and how trustworthy can it be built? Essentially, it seems, we are talking of phones that are constantly aware of their surroundings, we will want them to be, but we won’t want to have that affect the battery power for when we want to use them. I believe that Apple is ahead in the hardware integration area, but Google has shown us more on the software side with Google Now. Will Apple show us an updated Siri at WWDC that delves into more contextual awareness?

The other big limiting factor on these is the use of the radio. That’s a big drain on battery. Apple has already been showing off the technology that coalesces radio use to improve battery performance. I think so much of the technology is there now, it just needs to come together.

So true. All these grand ideas and the battery remains the barrier.

It will remain that way for about the next five years. The research being done on heat-generated electricity will start to bear fruit in a few years. I would love to imagine that electronics become so efficient that a kinetic drive system (Seiko Kinetic) would be sufficient to power a computer wrist watch. It really feels like we are on the cusp of the next shift in computing.

Whatever happened to the methanol fuel cell? Theoretically, it could power a laptop for a week. I imagine a mobile device for a month or more.

I used to closely track innovations in batteries, including methanol fuel cells and hydrogen fuel cells, but there are just so many problems with these devices (safety, reliability, access). For the foreseeable future, it seems everything else inside a smartphone must get both better *and* smaller, to allow for ever larger batteries (which are not advancing as rapidly).

On the trust issue, there was a tiny outrage about privacy on the Google Play Store, but it ended up being standard procedure at the time.

Please correct me if I’m wrong.

The issue was that when a user bought an app (maybe even a free one?) on the Google Play Store, Google handed over the user’s email address and billing zip code to the developer of the app.

The Apple App Store keeps that user info private and doesn’t share that info at all.

Of course both platforms have apps that ask for access to users’ address books / contacts, that is a separate issue.

That happened, yes. I do not know what the current situation is with it though.

I’ve long thought Apple is working towards its own network of things, including expanded sensor capability within the iPhone as well as accessory sensors outside of the iPhone. This natural extension of jobs-to-be-done requires a high level of privacy and data protection, as well as vertical integration and curation.

Agreed.

I am genuinely thankful to the owner of this web site who has shared this wonderful piece of writing atat this place.

you are in reality a just right webmaster. The web site loading pace is incredible.It sort of feels that you are doing any distinctivetrick. In addition, The contents are masterpiece.you’ve performed a wonderful task on this topic!

What does honey do for your skin? My website: kerassentials

Error 212 origin is unreachable

Some really excellent info, I look forward to the continuation.

I like this website very muⅽh, Itts a very nice situation to rеad and

get informɑtion.

The way to wгite oᥙt tһe difference of 9 and a number

is n-9.

Мy site: จัดดอกไม้งานขาว ดํา ราคา

Normally I don’t read post on blogs, however I wish to say that

this write-up very forced me to try and do so!

Your writing taste has been amazed me. Thanks, very great

post.

My partner and I stumbled over here by a different website and thought I may as

well check things out. I like what I see so now i am following you.

Look forward to exploring your web page again.

Keep on writing, great job!

I have been exploring for a little for any high quality articles or blog posts on this kind of house .

Exploring in Yahoo I eventually stumbled upon this site.

Studying this information So i’m glad to convey that I have a very

excellent uncanny feeling I came upon just what

I needed. I so much undoubtedly will make sure to don?t omit this website and provides it a look regularly.

Hello I am so grateful I found your web site, I really found you

by accident, while I was browsing on Digg for something else,

Anyways I am here now and would just like to say many thanks for a tremendous post and a all round exciting blog (I

also love the theme/design), I don’t have time to browse it all at the moment but

I have saved it and also included your RSS feeds, so when I have time I will be back to read much more, Please do

keep up the great jo.

This design is spectacular! You most certainly know how to

keep a reader amused. Between your wit and your videos,

I was almost moved to start my own blog (well, almost…HaHa!) Great job.

I really enjoyed what you had to say, and more than that, how you presented it.

Too cool!

Hey! I understand this is somewhat off-topic however I needed to ask.

Does managing a well-established website such as yours take a lot

of work? I am brand new to writing a blog however

I do write in my diary on a daily basis. I’d like to start a blog so I will be able

to share my own experience and views online. Please let me know if you have any kind

of ideas or tips for new aspiring bloggers.

Appreciate it!

Hello! I understand this is sort of off-topic however I needed to

ask. Does running a well-established blog like yours take a massive

amount work? I’m brand new to writing a blog however I

do write in my journal every day. I’d like to start a blog so I

can easily share my own experience and views online.

Please let me know if you have any kind of suggestions or tips for new aspiring bloggers.

Appreciate it!

Definitely consider that that you said. Your favorite justification appeared

to be on the web the easiest factor to take note of. I

say to you, I definitely get annoyed whilst other people consider worries that they just do not recognize about.

You controlled to hit the nail upon the highest and outlined out the entire thing without having side-effects , other people

can take a signal. Will probably be back to get more.

Thank you

What’s Happening i am new to this, I stumbled upon this

I’ve found It absolutely helpful and it has helped me out

loads. I hope to give a contribution & aid other customers

like its helped me. Good job.

Undeniably believe that which you stated. Your favorite reason appeared to

be on the net the simplest factor to consider of. I say to

you, I definitely get annoyed whilst folks consider

issues that they just do not recognize about.

You controlled to hit the nail upon the highest and

also outlined out the entire thing without having side effect ,

folks could take a signal. Will likely be again to get more.

Thank you

I’m not sure where you’re getting your info, but good topic.

I needs to spend some time learning much more or understanding more.

Thanks for fantastic info I was looking for this info for my mission.

Good write-up. I absolutely appreciate this site.

Keep writing!

I visited multiple websites however the audio feature for audio songs current at

this site is in fact wonderful.

It’s going to be finish of mine day, but before ending I am reading this great post to improve my experience.

I visited many web pages but the audio quality for audio songs present at this web page is really fabulous.

Remarkable issues here. I am very glad to peer your post. Thank you a

lot and I am having a look forward to contact you.

Will you please drop me a e-mail?

I do not even know the way I stopped up here, however

I thought this submit was good. I do not know who you are

but certainly you’re going to a well-known blogger in case

you are not already. Cheers!

Hey! I know this is kinda off topic however I’d figured I’d ask.

Would you be interested in trading links or maybe guest authoring a blog article or

vice-versa? My blog covers a lot of the same

topics as yours and I feel we could greatly benefit from each other.

If you’re interested feel free to shoot me an e-mail. I look forward to hearing

from you! Wonderful blog by the way!

Why users still make use of to read news papers

when in this technological globe all is existing on net?

My partner and I stumbled over here from

a different page and thought I may as well check things out.

I like what I see so i am just following you. Look forward

to looking over your web page repeatedly.

Hey just wanted to give you a quick heads up. The text in your

article seem to be running off the screen in Ie. I’m

not sure if this is a formatting issue or something to do with web browser

compatibility but I thought I’d post to let you know. The design look great though!

Hope you get the issue resolved soon. Kudos

May I just say what a relief to find an individual who really understands what

they are discussing on the net. You certainly understand how to bring an issue to light and make

it important. More people have to look at this and understand this side of your story.

It’s surprising you are not more popular given that you certainly have the

gift.

Thanks for another informative site. Where else could I get that type of information written in such

an ideal approach? I have a project that I’m just now working on, and I have been on the look out for such

information.

If you wish for to get much from this post then you have to apply such techniques

to your won website.

I read this article completely regarding the resemblance of most

up-to-date and previous technologies, it’s awesome article.

This is a really good tip especially to those fresh to the blogosphere.

Simple but very accurate information… Thank you for sharing this one.

A must read article!

This design is steller! You obviously know how to keep a reader amused.

Between your wit and your videos, I was almost moved

to start my own blog (well, almost…HaHa!) Great job.

I really loved what you had to say, and more than that, how you

presented it. Too cool!

Thanks , I’ve recently been searching for info approximately this

subject for a while and yours is the greatest I’ve came upon so far.

But, what about the conclusion? Are you sure concerning the

source?

Hurrah! At last I got a blog from where I can in fact

get helpful information concerning my study and knowledge.

Hurrah, that’s what I was seeking for, what a material!

existing here at this webpage, thanks admin of this web site.

Hi, I do believe this is an excellent web site. I stumbledupon it 😉 I am going to come back once again since i have

saved as a favorite it. Money and freedom is the best

way to change, may you be rich and continue to help other people.

Fastidious replies in return of this issue with genuine

arguments and explaining all regarding that.

Every weekend i used to go to see this website, for the reason that i

want enjoyment, since this this website conations

genuinely pleasant funny data too.

Hi! I just wanted to ask if you ever have any problems with hackers?

My last blog (wordpress) was hacked and I ended up losing many months of hard work due to no

data backup. Do you have any methods to prevent hackers?

My brother suggested I might like this blog. He was entirely right.

This post actually made my day. You cann’t imagine

just how much time I had spent for this info! Thanks!

I’ve been exploring for a little for any high-quality articles or

blog posts on this sort of area . Exploring in Yahoo

I at last stumbled upon this web site. Studying this information So i am satisfied to exhibit that I’ve a

very excellent uncanny feeling I found out exactly what I

needed. I most unquestionably will make certain to don?t forget this web site and give it

a look regularly.

Thanks for some other informative site. Where else may I am getting

that type of info written in such an ideal manner? I’ve a challenge that I’m just now working on, and I’ve been on the glance out for such info.

Hi, this weekend is fastidious in support of me,

because this occasion i am reading this great educational

post here at my house.

Very nice article, just what I needed.

I enjoy reading through a post that can make men and women think.

Also, many thanks for allowing for me to comment!

I feel this is one of the such a lot important info

for me. And i am glad studying your article.

However want to observation on some basic things, The site style is perfect, the articles is in reality excellent : D.

Excellent process, cheers

I’m not sure where you’re getting your info, but great

topic. I needs to spend some time learning

more or understanding more. Thanks for magnificent information I was looking for this information for my mission.

I was suggested this web site by my cousin. I’m not sure whether this post is written by him as nobody else know such detailed about my problem.

You’re wonderful! Thanks!

Somebody essentially lend a hand to make severely articles I might state.

That is the first time I frequented your web page and so far?

I surprised with the research you made to create this particular submit incredible.

Excellent job!

Hi, I think your site may be having web browser compatibility problems.

When I look at your web site in Safari, it looks fine however, when opening in I.E., it

has some overlapping issues. I just wanted to

provide you with a quick heads up! Other than that, fantastic blog!

I do not even know the way I finished up here, however I assumed this publish was once good.

I do not understand who you might be however certainly you’re going

to a famous blogger if you aren’t already. Cheers!

Great blog here! Also your website loads up very fast!

What web host are you using? Can I get your affiliate link

to your host? I wish my site loaded up as fast as yours lol

An outstanding share! I’ve just forwarded this onto a friend who has been doing a little homework

on this. And he actually ordered me lunch because I discovered it for him…

lol. So let me reword this…. Thank YOU for the meal!!

But yeah, thanx for spending time to talk about this issue here on your site.

Excellent post. Keep writing such kind of information on your site.

Im really impressed by your blog.

Hi there, You’ve performed a fantastic job. I will definitely digg it

and in my view suggest to my friends. I’m sure they’ll be benefited from this site.

Amazing! This blog looks exactly like my old one!

It’s on a entirely different topic but it has pretty much the same layout and design. Great

choice of colors!

Can I just say what a relief to uncover somebody

who really understands what they’re talking about

over the internet. You definitely understand how to bring an issue to light and make it important.

More people ought to look at this and understand this side of your story.

It’s surprising you aren’t more popular since you definitely possess

the gift.

What i don’t understood is in truth how you are not really much more neatly-favored than you might be now.

You are so intelligent. You know therefore significantly

with regards to this subject, made me for my part believe it from numerous various angles.

Its like men and women don’t seem to be interested except

it’s one thing to do with Woman gaga! Your own stuffs outstanding.

Always maintain it up!

Thank you for some other wonderful article. The place else could anyone get that kind

of information in such an ideal means of writing? I’ve a presentation subsequent week, and I’m on the look for such information.

I loved as much as you will receive carried out right

here. The sketch is tasteful, your authored material stylish.

nonetheless, you command get got an edginess over that you wish be delivering the following.

unwell unquestionably come further formerly

again as exactly the same nearly a lot often inside case you

shield this hike.

Hi, I do think this is an excellent web site.

I stumbledupon it 😉 I am going to come back once again since I

book-marked it. Money and freedom is the best way to

change, may you be rich and continue to guide others.

Attractive section of content. I just stumbled upon your website and in accession capital to assert

that I get in fact enjoyed account your blog posts. Anyway I’ll be subscribing to your augment

and even I achievement you access consistently quickly.

Hello! I simply would like to give you a huge thumbs up for your great information you have here on this post.

I’ll be returning to your blog for more soon.
