CES is certainly the technology lover's candy store. It is nearly impossible for any one person to see everything of interest at CES, so my approach is to look for the hidden gems: products that expose me to a concept or idea that could have a lasting impact on the industry.

So in this, my Friday column, I figured I would highlight a few of the most interesting things I saw at this year's CES.

Recon Instruments GPS Goggles

The first was a fascinating product made by a company called Recon Instruments in partnership with a number of ski and snowboard goggle makers. What makes it unique is that the goggles carry Recon Instruments' modular technology, which builds an LCD screen right into the goggles.

The Recon Instruments module is packed with features useful on the slopes. It displays your speed, the location of your friends, temperature, altitude, your current GPS location, vertical stats on jumps, and much more.

Think of this as your heads-up display while skiing or snowboarding. The module can also connect wirelessly to your Android phone, letting you see caller ID and use audio and music controls.

GoPro Hero2 + Wi-Fi BacPac

In the same extreme sports technology category, I was interested in the newest GoPro, the Hero2, and its Wi-Fi BacPac accessory. I wrote about the GoPro HD back in December and mentioned it as one of my favorite pieces of technology at the moment. The Hero2 and Wi-Fi BacPac make it possible to use the GoPro in conjunction with a smartphone and companion app, so you can use the phone's display to see what you are recording or have already recorded. This is useful in many ways, but what makes it interesting is that I believe it represents a trend: hardware companies developing companion software or apps that create a compelling extension of the hardware experience. I am excited to see more companies take this approach and use software and apps to extend the hardware they create.

In this case the companion app acts as an accessory to the GoPro Hero2 hardware and provides a useful and compelling experience. Another compelling feature is that you can use your smartphone and its live link to the Hero2 to stream what you are recording to the web in real time. That makes it possible for friends, family, and loved ones to watch memories being created as they happen.

Dell XPS 13 Ultrabook

Dell came out strong in the Ultrabook category with possibly the best notebook it has made in some time. The XPS 13's coolest features are the near edge-to-edge Gorilla Glass display, which needs to be seen to be appreciated, and the unique carbon fiber bottom, which keeps the underside cool.

The 13.3-inch display looks amazing under the Gorilla Glass, and its ultra-slim bezel packs it into a frame about the size of an 11-inch notebook. I am surprised to say that if I were to use a notebook other than my MacBook Air, this would be the one.

Samsung 55-inch OLED TV

A sight to behold was the Samsung 55-inch OLED TV. Seeing it felt much like the first time I saw an HDTV running HD content. The vivid picture quality and rich, deep color are hard to put into words. Samsung is leading the charge toward near edge-to-edge glass on TVs, and this one gets even closer. The bezel and edge virtually disappear into the background, leaving just the amazing picture to enjoy.

We have been waiting for OLED displays to reach the market, if only because getting them there now means they may be affordable in five years. OLED is one of the most exciting display technologies in a while, and it is important that the industry embrace it so we can get OLED on every device with a display as fast as possible.

Samsung didn't announce pricing but said the TV would be available toward the end of the year. It will most likely cost an arm and a leg.

Intel's x86 Smart Phone Reference Design

Intel made a huge leap forward this CES by finally showing the world its latest 32nm "Medfield" SoC running in a smart phone reference design. I spent a few minutes with the device, which was running Android 2.3, and I was impressed with how snappy it was, including pinch-to-zoom on web pages and its graphics capabilities.

Battery life is still a concern of mine, but Intel's expertise in Hyper-Threading and core management could help here. The most amazing thing about the smart phone reference design is that it didn't need a fan.

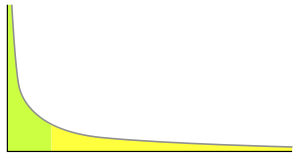

Motorola announced that it would bring Intel-based smart phones to market in 2012. I am very excited about this, as it could mark a new era for Intel. The coming competition between ARM and x86 is going to be fun to watch and great for the industry and consumers.

Motorola Droid Razr Maxx

Last but not least, the Motorola Razr Maxx has my vote for most interesting smart phone. It was a toss-up between the Razr Maxx and the Nokia Lumia 900; I chose the Razr Maxx because of its most interesting feature: the 3300 mAh (12.54 Wh) battery Motorola packed into the Razr form factor. The Maxx is just slightly thicker than the Droid Razr. Motorola claims the Razr Maxx can get up to 21 hours of talk time. I talked to several Motorola executives who had been using the phone at the show, and they remarked that with normal usage they were able to go several days without charging. By contrast, my iPhone was dead by 3pm every day I was at CES.

Making our mobile batteries last is of the utmost importance going forward. I applaud Motorola for its engineering work and for creating a product that is sleek, powerful, and has superior battery life.